I sponsored the testing competition at CAST, last week, awarding $1,426.00 of my own money to the winners.

My game, my rules, of course, but I tried to be fair and give out the prizes to deserving winners.

There was some controversy…

We set it up with simple rules, and put the onus on the contestants to sort themselves out. The way it worked is that teams signed up during the day (a team could be one tester or many), then at 6pm they received a link to the software. They had to download it, test it, report bugs, and write a report in 4 hours. We set up a website for them to submit reports and receive updates. The developer of the product was sitting in the same ballroom as the contestants, available to anyone who wished to speak with him.

I left the scoring algorithm unexplained, because I wanted the teams to use their testing skills to discover it (that’s how real life works, anyway). A few teams investigated the victory conditions. Most seemed to guess at them. No one associated with the conference organizers could compete for a prize.

During the competition, I made several rounds with my notebook, asking each team what they were doing and challenging them to justify their strategy. Most teams were not particularly crisp or informative in their answers (this is expected, since most testers do not practice their stand-up reporting skills). A few impressed me. When I felt good about an answer, I wrote another star in my notebook next to their name. My objective was partly to help me decide the winner, and partly to make myself available in case a team had any questions.

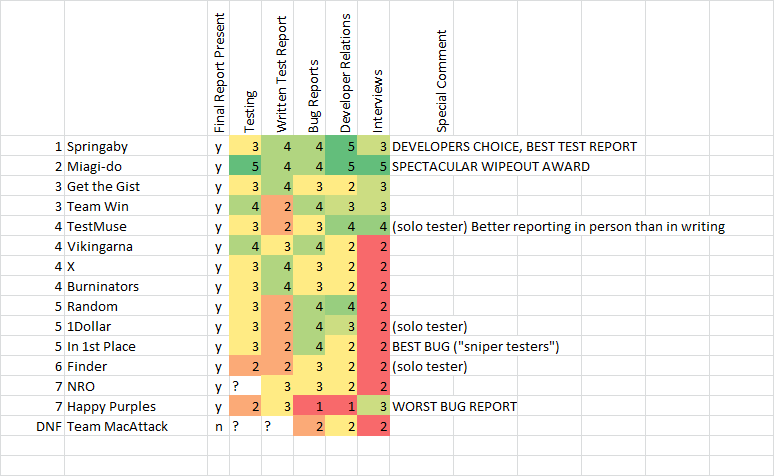

David reviewed the 350 bug reports, while I analyzed the final test reports. We created a multi-dimensional ordinal scale to aid in scoring:

Awards:

- Worst Bug Report: Happy Purples ($26)

- Best Bug Report: In 1st Place ($400)

- Developer’s Choice Award: Springaby ($200)

- Best Test Report: Springaby ($800)

These rankings don’t follow any algorithm. We used a heuristic approach. We translated the raw experience data into 1-5 scales (where 5 is OMG and 1 is WTF). David and I discussed and agreed to each assessment, then we looked at the aggregates and decided who would get the awards. My final orderings for best test report (where report means the overall test report, not just the written summary report) are on the left.

Note: I don’t have all the names of the testers involved in these teams (I’ll add them if they are sent to me).

Now for the special notes and controversies.

Happy Purples

Happy Purples won $26 for the worst report made of a bug (which was actually for two bug reports: that it was too slow to download the software at the start of the competition AND that a tooltip was inconsistent with a button title because it wasn’t a duplicate of the button title).

The Happies were not a very experienced team, and that showed in their developer relations. I thought their overall bug list was not terrible, although it wasn’t very deep, either. They earned the ire of the developer because they tried to defend the weird bug reports, mentioned above, and that so offended to David that he flipped the bozo bit on them as a team. Hey, that’s realistic. Developers do that. So be careful, testers.

TestMuse

Keith Stobie was the solo tester known as TestMuse. He was a good explainer when I stopped by to challenge him on what he was doing, but I don’t think he took his written test report very seriously. I had a hard time judging from that what he did and why he did it. I know Keith well enough that I think he’s capable of writing a good report, so maybe he didn’t realize it was a major part of the score.

In 1st Place

They didn’t report many bugs (9, I think). But the ones they reported were just the kind the developer was looking for. I don’t remember which report David told me was the bug that won them the Best Bug Report award, but each bug on their list was a solid functionality problem, rather than a nitpicky UI thing. We called these guys the sniper testers, because they picked their shots.

Springaby

A portmanteau of “springbok” and “wallaby”, Springaby consisted of Australian tester Ben Kelly and South African Louise Perold. Like “Hey David!”, the winner of the previous CAST competition (2007), they used the tactic of sitting right next to the developer during the whole four hours. Just like last time, this method worked. It’s so simple: be friendly with the developer, help the developer, ask him questions, and maybe you will win the competition. Springaby won Developer’s Choice, which goes to David’s favorite team based on personal interactions during the competition, and they won for best test report… But mainly that was because Miagi-Do wiped out.

Note: Springaby reported on of their bugs in Japanese. However, the developer took this as a jest and did not mark them down.

Miagi-Do

Miagi-Do was kind of an all-star team, reminiscent of the Canadian team that should have won the competition in 2007 before they were disqualified for having Paul Holland (a conference organizer) on their side. This time we were very clear that no conference organizer could compete for a prize. But Miagi-Do, which consisted mainly of the friends and proteges of Matthew Heusser (a conference organizer) decided they would rather have him on their team and lose prize money than not have him and lose fun. Ah sportsmanship!

The Miagi-Do team was serious from the start. Some prominent names were on it: Markus Gaertner, Ajay Balamurugadas, and Michael Larsen, to name three. Also, gutsy newcomers to our community, Adam Yuret and Elena Houser.

Miagi-Do got the best rating from my walkaround interviews. They were using session-based test management with facilitated debriefings, and Matt grilled me about the scoring. They talked with the developer, and also consulted with my brother Jon about their final report. I expected them to cruise to a clear victory.

In the end, they won the “Spectacular Wipeout” award (an honorary award made up on the spot), for the best example of losing at the last minute. More about that, below.

The Controversy: Bad Report or Bad Call?

Let’s contrast the final reports of Miagi-Do and Springaby.

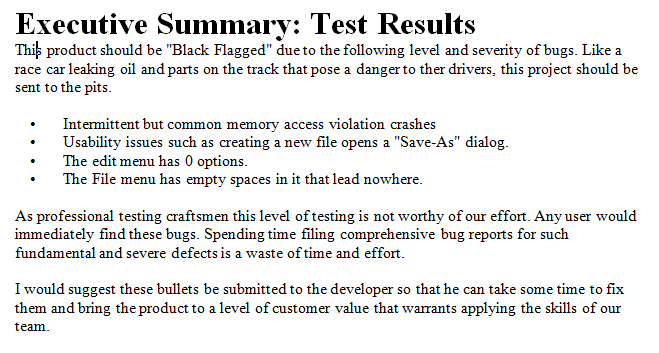

This is the summary from Miagi-Do. Study it carefully:

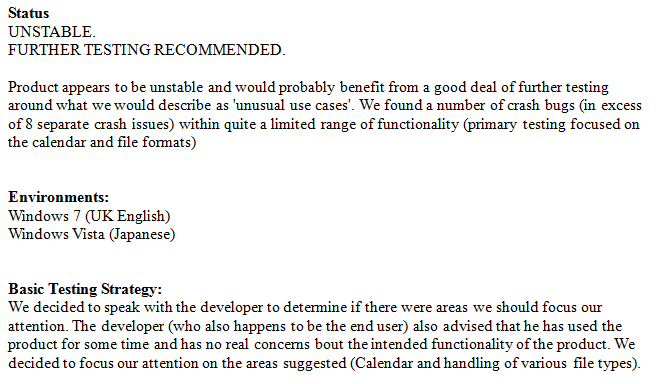

Now this is the summary from Springaby:

Bottom line is this: They both criticize the product pretty strongly, but Miagi-Do insulted the developer as well. That’s the spectacular wipeout. David was incensed. He spouted unprintable replies to Miagi-Do.

The reason why Miagi-Do was the goat, while Springaby was the pet, is that Springaby did not impose their own standard of quality onto the product. They did not make a quality judgment. They made descriptive statements about the product. Calling it unstable, for instance, is not to say it’s a bad product. In fact, Springaby was the ONLY team who checked with David about what quality standard is appropriate for his product. The other teams made assumptions about what the standard should be. They were generally reasonable assumptions, but still, the vendor of the product was right there– why assume you know what the intended customer, use, and quality standard is when you can just ask?

Meanwhile, Miagi-Do claimed the product was not “worthy” to be tested. Oh my God. Don’t say things like that, my fellow testers. You can say that the effort to test the product further at this time may not be justified, but that’s not the same thing as questioning the “worthiness” of the product, which is a morally charged word. The reference to black flagging, in this case, also seems gratuitous. I coined the concept of black flagging bugs (my brother came up with the term itself, by borrowing from NASCAR). I like the idea, but it’s not a term you want to pull out and use in a test report unless everyone is already fully familiar with it. The attempt to define it in the test report makes it appear as if the tester is reaching for colorful metaphors to rub in how much the programmer, and his product, sucks.

Springaby did not presume to know whether the product was bad or good, just that it was unstable and contained many potentially interesting bugs. They came to a meeting of minds with the developer, instead of dictating to him. Thus, even those both teams concurred in their technical findings, one team pleased their client, the other infuriated him.

This judgment of mine and David’s is controversial, because Adam Yuret, the up and coming tester who actually wrote the report, consulted with my brother Jon on the wording. Jon felt that the wording was good, and that the developer should develop a thicker skin. However, Jon wasn’t aware that Miagi-Do was working on the basis of their own imagined quality standard, rather than the one their client actually cared about. I think Adam did the right thing consulting with Jon (although if they had been otherwise eligible to win a prize, that consultation would have disqualified them). Adam tried hard and did what he thought was right. But it turns out the rest of the Miagi-Do team had not fully reviewed the test report, and perhaps if they did, they would have noticed the logical and diplomatic issues with it.

Well, there you go. I feel good about the scoring. I also learned something: most testers are poorly practiced at writing test reports. Start practicing, guys.

Hi James,

I just gotta say that the Heuristic Evaluation Algorithm is *pure gold*! XD I’m gonna read the posting more thoroughly and make comments and critisism (and which I beg to get in return!). I wish I could have persuaded my boss to sent me to CAST but you can’t get everything, can you? But I hope some rich tester (or a poor like me) could arrange similar workshops in Finnish conferences. But rest assured, I’ll be back!

Interesting post, one that highlights to me a problem that many testers have, especially once they become more experienced – believing that a product shouldn’t be shipped if it doesn’t match the testers standard for quality, whatever that standard is at that moment in time which depends, amongst other things if you got out of bed with the left or right foot in the morning. I’m not excluding myself here as I fell into that trap before and will most likely fall into it again.

We (as testers) seem to have no problem writing or talking about adjusting the quality needs to our customers or project sponsors but if push comes to shove these needs are ignored and we mutate into quality gatekeepers once more which I find fascinating.

I’m looking at this page in Firefox 6 and note that there’s a horizontal scroll bar displayed. Is this page poor quality? Of course not. Could it have been prevented? Yes. Do I feel good about having spotted it? Well, there’s the question – do I feel good because I spotted a weakness in someone else’s work or because I now have the chance to send the owner of the page a quiet message and noting the small problem which doesn’t distract from the page’s message thereby helping out?

I’d usually would’ve done the latter but wanted to prove my point, there’s always something to find so it’s up to the tester in question to decide what to do with that information. And yes, it can be a struggle not to be smug about finding problems as we can see in this example from people, all of whom I respect a lot.

Thanks for sharing this.

I believe that a good developer relationship is an important part of testing. However, I have never cared what the developer thought about what was shippable or not. The customer pays for it and the end user has to work with it. Their opinions about what works count much more than the person who wrote it. You should have had a customer around for the testers to talk with.

[James’ Reply: This conference was about context-driven testing. An important bit of context that applied in this case was that David had created the product for his own use. He was not intending to sell it to anyone. He was the user. Testers who recognized that were bound to do well.

A tester has to know whom he is serving. If you are hired by one guy and decide that you serve another guy, you must expect to annoy your client.]

James,

Thanks for sharing this.

I agree to your points w.r.t. good relationship with developers and focus on test reporting skills. There’s a lot to learn from this post and imbibe into what I do as a tester.

CAST2011 was my first testing conference despite having been a software tester for the last 8 years. As such, this was my first testing competition (I was one of the 6 members of the Burninators. I have no idea if any of the members knew each other before but this was my first time meeting them all.) It was eye opening, for a whole host of reasons. I assumed I had no assumptions going in since I had no idea what to expect of the competition. That was my first major error. As James points out, I made a critical, flawed starting assumption that I knew what level of quality the software was trying to achieve. I also made a whole host of assumptions about the rules and end game of the competition. In many ways it was like I opened a box of Monopoly that had no enclosed rule sheet and just decided to play it in whatever way I felt. That may be an interesting exercise but it did not help us compete effectively in a contest that did have rules and winning conditions (albeit unexposed ones).

[James’ Reply: You’re learning how to be in a testing competition though. And we’re learning how to put on a competition.]

Taking a step back, this was one of the better learning opportunities from a conference full of great learning opportunities. By creating a contest designed to work, as James says, “how real life works”, the organizers created a crucible for testers to explore how they approach their craft and expose their own biases, weaknesses and strengths. I have a great deal more clarity now on what skills I need to develop further. In fact, I would recommend that next time the effort goes even further and an entire session the next day of the conference be devoted to a post-mortem of the contest. I suspect the debate would be lively.

[James’ Reply: Hmm, a post-mortem would be a great idea.]

I have one other minor suggestion for any future contests. Do not allow permanent seats next to the developer. As testers we have now noticed the repeatable pattern that the team that sits with the developer wins. Every team serious about winning will be shooting for that spot next time. But physical limitations of space mean just one, maybe two, teams at any future contest will get that luxury. (Why do I have the feeling this request will be met with the response: “works as designed”. )

[James’ Reply: I was prepared for that. Had I received any complaints about that (or seen any trouble with it) we would have put a system in place to deal with it fairly. (Probably by allowing each team to post a single tester near David.) But since David wasn’t all that popular during the proceedings, this was an indication that the other teams were not interested in the scarce resource of space near the programmer.]

Thanks again for an enlightening evening and conference.

Phillip Seiler

PS The Burninators listed all our members in our test report so you can include our names (assuming nobody else on the team minds. I don’t.) Personally, I consider including names an essential element of the report as it gives the developers a person rather than an entity (QA, Testing Dept, etc…) to work with. As you note, the human relation side of testing is one we can all work to improve.

Great summary report.

Firstly let me congratulate the Springaby team. They clearly wrote a well thought out and diplomatic summary report and outperformed every eligible team in the running to a well deserved victory.

While I agree with almost everything here, I intend to blog my perspective on this challenge soon.

I think Jon has some valid points about sensitivity of the developer. Of course there also may be contexts here yet to be uncovered. I will fully agree that the wording is unfortunate given it’s ability to be easily misinterpreted. I think what I had originally written was too delicate and academic but where we landed was definitely too far in the other direction and given more time we might’ve come to a middle ground.

All of that said I think there’s a worthy discussion about the hierarchical social roles of developers and testers, as well as the value of verbal communication and collaboration versus the written word to be had and I look forward to it.

Thanks again for including me in the conference, it was an honor and a privilege to be in the company of so many intelligent leaders in our craft.

[James’ Reply: We wanted to run a fully authentic competition, to the degree possible in four hours. Part of that is dealing with the feelings of the programmer. However, in this case, I concurred with the programmer.

Remember, it wasn’t JUST his feelings. What triggered his feelings was an unwarranted presumption you were making. Your mission was NOT to provide your own personal opinion of the quality of the product, but rather to test in a professional way. We testers must understand who our stakeholders are, or else we won’t be very popular in our work.

In the case of Happy Purples, I think David was perhaps too harsh. They reported some reasonable bugs along with their silly ones.]

Do you think that the Miagi-Do test report from the testing competition was an accurate reflection of what those testers would do in the real world? Or could it have been more tongue-in-cheek due to the testing conference environment? I wasn’t present at CAST and so did not participate or have a sense of the atmosphere during the competition, but just reading over the Miagi-Do excerpt here I get the feeling that this was more grandstanding and not the way the testers would present themselves in a professional context.

[James’ Reply: They were very serious, I would say. As I said, I was impressed. It’s the sort of thing that I also would do (and have done) in real projects (see my recent post about paired exploratory surveys). But you have to remember that a one time test cycle is going to work differently than a longer term project. If this had been a 10 day competition, I’m sure we would have seen more of a marathon mentality.

Also, if we were to do five more of these competitions, in short order, I suspect everyone would settle down into a generally professional strategy. Each team was groping for an approach that would do them credit, in this isolated exercise.]

@Claire: We were indeed very serious. The Black flag, however was very much a hypothetical. It went from being a gently worded call for acceptance collaboration to a more aggressive hypothetical invoking of a black flag. One comment was made that we thought the product was beneath us to test. We /did/ test it, filed 45 bugs that were not challenging to uncover. In a business context I’d have had a personal conversation suggesting we meet and highlight some general areas to uncover an acceptance criteria list. I always think it’s a bummer to be mired in “B-time” (to use SBTM parlance) and it can feel a great deal like clerical work and less like skilled technical testing. Of course bug reporting is part of a tester’s job, but at some point I find it’s better to identify acceptance criteria than to fill the bug DB with the results of each click. As I said, there were more contexts involved that I’ll get into later. 🙂

I can say without question in a non-hypothetical business context I would never send anything worded that strongly to a stakeholder, especially a sensitive one with whom I have no personal relationship.

Of course more to come in the blog once I dig myself out of this epic undying project. 🙂

To dull the sharp sword of blaming Miagi-Do could have used words like “it seems to me”, “in my opinion”, apparently”, etc. All observations are to be projected against the testers beliefs and expectations, not against “universal” truths about software quality (if there are any).

“When I use software I expect the software to blah-blah-blah–” “Well this thing works differently.” “OK. I’ll adjust my expectations with this particular software.”

The use of metaphors is accepted in context where it IS accepted, and where there is mutual trust that the tester is not trying to undermine the developer. And metaphors are extremely effective in explaining the tech-language to a customer with less technical skills. When in context where the tester doesn’t know the customer or the developers the testers job is to build that understanding which quality criteria are important and what is the way to communicate to stake holders. Be they IEEE standard documents or power point slides.

you’re absolutely right – writing reports manually is not something most testers are skilled at…

Anyway, I enjoyed reading the post – and the whole idea of a testing competition sounds great to me. I think it’s something every large company should apply!

Hi James,

Team MacAttack didn’t finish or compete on purpose. It was just me on one machine messing around with 2 friends from Indiana. We were too tired and chose wine & chatting about old days over serious competition. We did go write some reports and such, talking about exploratory techniques and so on.

[james’ reply: That’s fine. You signed up officially, so you get to be scored.]

A few considerations for future competitions: 1. Having never competed in any test competitions, is this a normal duration? We felt this was late for some participants, and with the chance to test together on our own terms so tempting, we threw in the towel.

[james’ reply: It’s the minimum duration I think is reasonable for a “test something you’ve never seen before” competition.]

Disqualified: DNF is a great status. We participated only to get a better idea of what the testing competition was about. It was good to learn more about what was considered important.

In terms of 3 females with 1 Mac on VMWare Fusion, the vote was for wine and sleep over serious competition. I’m still glad there was a competition and I hope in the future that casual competition for other purposes will be allowed.

[james’ reply: I don’t understand. You DID compete casually. It WAS allowed. But next time, just don’t sign up officially, or else tell me that you don’t want to be included on the scoring.]

Thanks,

Lanette

Thanks,

Lanette

Thanks! My point was, I had many invalid expectations for the testing competition as a complete newbie. I was expecting a browser based application of some flavor so that more participants/devices would be applicable. I learned otherwise after signing up. I expect I won’t be the last ill informed participant. 🙂

[James’ Reply: I wanted an app that would not require the network.]

The point of casual competition for learning other things was not a criticism, just a statement that it was of value to me, and for that reason my vote is for that practice to be continued (that people can casually participate if they can finish or not, to get when they can out of it).

[James’ Reply: I’ll try to make it clearer next time that casual participation is welcome.]

Thank you James, for a good competition. It certainly met my expectations, which were to get to know a few people by actually doing the work, to learn some things, and to have a pleasurable experience while doing it.

In those terms, it was a home run.

One more value that we haven’t discussed much is a seecrit side benefit of these kinds of competitions: You get to experiment in a very low-risk way. For example, the Miagi-Do team was /entirely/ self-organized. We didn’t have a manager. We ran, full-out, to do the best testing we could in the time we could.

[James’ Reply: That’s true.]

After the conference, one of the things I’ve learned in discussions with the team is that we /all/ had concerns that could have mitigated some risk, but each of us was ‘sticking to our knitting.’ Perhaps because the team had not worked together; perhaps our level of trust was just a bit too high.

In the end, we experimented, learned a thing or two, and will be a better team for next time.

If that’s not a win, I’m not sure what is. It sure beats a couplea hundred bucks with no learnings, at least in my book. 🙂

[James’ Reply: Absolutely!]

Is there an outline or template that you use for writing test reports? Since you say that most testers are very bad at writing test reports and that we should practice, can you give us a complete example of a well written report?

Good information. Thanks

Chris

[James’ Reply: I don’t use templates. But you can find examples in the appendices to my class, which you can download from the front page of my website.]

One of the topmost interesting blog entries for me. Congratulations to the best and worst teams and all the participating teams. And special thanks to the ‘Miagi-Do’. Actually, many of us can learn if we just follow the opposite best practices of what ‘Miagi-Do’ did.

The best part for me is

<>

🙂

“Actually, many of us can learn if we just follow the opposite best practices of what ‘Miagi-Do’ did.”

@ A.M. Iftekharul Alam: I don’t think you know what we did. The report is just one aspect of the contest. The perspective from a participant’s view: http://enjoytesting.blogspot.in/2011/08/cast-2011-tester-competition-miagi-do.html

I guess one of my comment parts is missing because of the ‘Angular Brace <‘ . May be it is a bug for this site. (:)) Whenever the comments are in between two consecutive ‘left angular brace ‘, then only one from each are being shown and the comments get invisible.

[James’ Reply: Post a better comment and I’ll replace the older one with it.]

Hi, James,

I read your report and the comments with considerable interest, particularly the report and comments on developer treatment.

I may have lost a client of two years because the last report I wrote, and I write detailed reports, was critical of developers who made the same mistakes I had been reporting for two years. I think through my frustration I lost some of my professionalism and that may have cost me, professionally and financially.

I thought I would pass this along to emphasize the importance of treating developers with kid gloves; Though they themselves may react unprofessionally at times, that does not give us the right to reply in kind.

Keep up the good work,

Sorry I missed the show, (I corrected the typo, ‘shoe’. Anyone remember Ed Sullivan?)

Alan

Alan: It’s hard to know how to diplomatically yet meaningfully convey that developers are making the same mistakes they were two years ago. I think I’d be hard pressed to do it smoothly. Maybe Jerry Weinberg could do it, but even he might do it via some “soft channel” rather than in written form.

Nobody bats a perfect 1.000.

Perhaps James has some thoughts he could share with you sometime one-on-one via Skype.

Cheers, MMB

What I find interesting is that there was no scoring or adjustment based on time or resources. Maybe I’m jaded since I was one of the few solo teams (after being denied entry into 3+ groups who “didn’t want any more people” – I had no idea there were other solos!). Anyway, as a team of 1, I felt I wasn’t getting much value in the exercise. My original objective of wanting to participate was to learn from others, so I felt my conference time would be better served talking to those not participating over testing alone. So I stopped after 2 hours.

As a result, I was one tester who worked for 2 hours. The winning team was 2 testers for 4 hours for 8 man hours, and Miagi-Do had 6/7 testers for 4 hours for a total of 24/28 man hours. Big magnitude of difference if you ask me.

We all know that we can improve testing up to a certain point by adding time or people. I would think that future contests should consider taking this into account. Tell teams that they can use up to a certain amount of “man hours” as a cost. After that, their score will be impacted for going over budget. Let the games begin.

[James’ Reply: I don’t think man-hours is an interesting measure in this case. It’s not a productivity exercise. A single person might out-do a team, anyway. Each team or person decides how to tackle the problem.]