I am writing and collecting tools to implement a particular idea: augmented experiential testing (AET). I talk about this in my classes, but I want to say a little about it here.

AET (which I realize will be misremembered as augmented exploratory testing, though that’s not such a terrible thing) means the application of software tools to enhance experiential testing. So, I guess I had better define experiential testing: experiential testing means testing wherein your goal is to duplicate the experience of a real user whom you have in mind. A lot of what people call exploratory testing and manual testing is also experiential testing. But when we test we are always exploring to some extent, even when the testing is not experiential. I think the term “manual testing” is toxic, so I prefer to say interactive or experiential testing.

The opposite of experiential testing is instrumented testing, which is when tools alter or attenuate the normal experience of using the product. For instance, if you automate the pushing of buttons and the checking of screens when testing, that is instrumented testing.

Augmented experiential testing happens when the tools you use are not changing the user experience in any way, but are helping you test. It’s like augmented reality for testers.

Examples of AET tools include:

- Tools that record your test coverage.

- Tools that help you analyze or map the product as you test it.

- Tools that help you notice certain kinds of bugs that you might otherwise miss.

- Tools that help you take notes while testing.

- Tools that give you ideas for what or how to test in real-time.

I am currently a bit obsessed with AET. I have written a few tools for it:

- Web-testing bookmarklets that help me discover everything that is on a page and facilitate making coverage outlines.

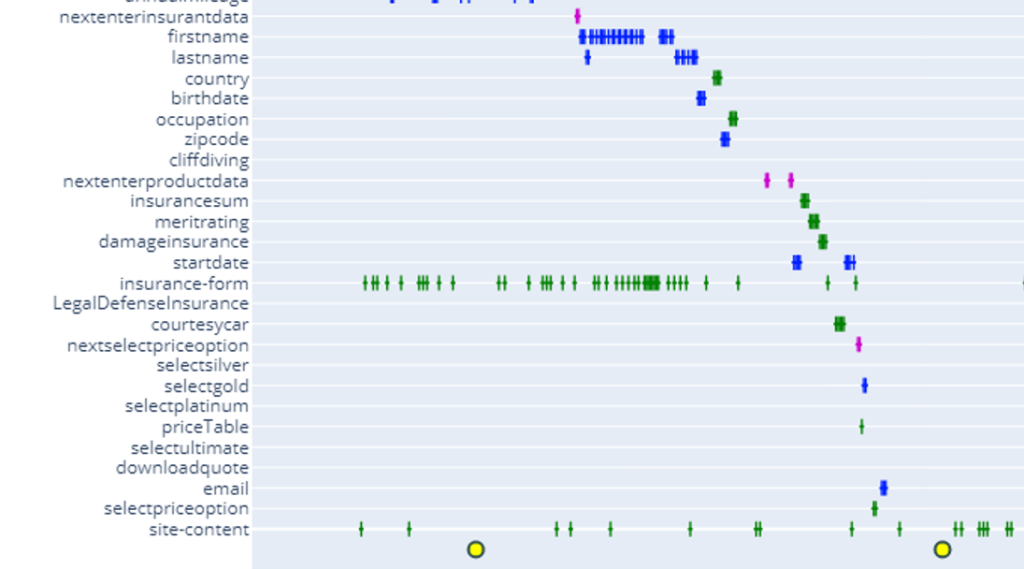

- A browser extension that records everything I do when I test, along with tools to visualize and analyze that.

- A bookmarklet that allows me to insert notes into those recordings.

- A monitoring tool that watches the Chrome debug log and announces when there are errors.

- A tool that controls a programmable light that glows green when I test something new and red when I test something old.

Some of these are on GitHub, but some I only share with clients and people who take my classes.

(This is a time series visualization based on a recorded test session.)
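To give a feel for how small the core of such a tool can be, here is a minimal sketch of the "green for new, red for old" decision behind the programmable light mentioned above. It is not the actual tool: the class name `NoveltyTracker`, the method `light_color`, and the idea of keying on page paths are all assumptions made for illustration, and the code only returns a color name rather than driving real hardware.

```python
from dataclasses import dataclass, field


@dataclass
class NoveltyTracker:
    """Hypothetical helper: remembers which items the tester has already touched.

    An "item" is any string that identifies something tested, such as a page
    path or an element id (an assumption for this sketch).
    """

    seen: set[str] = field(default_factory=set)

    def light_color(self, item: str) -> str:
        """Return "green" the first time an item is touched, "red" afterwards."""
        if item in self.seen:
            return "red"
        self.seen.add(item)
        return "green"


if __name__ == "__main__":
    tracker = NoveltyTracker()
    # Simulated stream of things touched during a test session.
    for item in ["/login", "/cart", "/login"]:
        print(item, tracker.light_color(item))
```

The tester's experience of the product is untouched; the tracker only watches and signals, which is what makes this augmentation rather than instrumentation.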

>>>experiential testing means testing wherein your goal is to duplicate the experience of a real user whom you have in mind.

Shouldn’t this be the goal of almost all the testing we do as testers? To interact with, observe, and draw inferences about the application as a real user would?

[James’ Reply: The answer has to be no. I may write a script that tries 10,000 variations of particular data. This script probably does not duplicate the experience of any user. But it does explore the data space of the software. Any automation that exercises functionality but does not involve a human naturalistically interacting with the product is instrumented testing, not experiential (unless you are doing API testing, in which case you would be duplicating the experience of a programmer using the API).]
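For readers who want to picture the kind of script described in that reply, here is a minimal sketch of instrumented testing that tries 10,000 variations of input data. Everything in it is hypothetical: `parse_quantity` stands in for whatever function is under test, and the input alphabet and length limits are arbitrary assumptions.

```python
import random
import string


def parse_quantity(text: str) -> int:
    """Hypothetical function under test: parse a non-negative integer quantity."""
    value = int(text.strip())
    if value < 0:
        raise ValueError("quantity must be non-negative")
    return value


def random_input(rng: random.Random) -> str:
    """Build a short string from digits and a few troublesome characters."""
    alphabet = string.digits + " -+.e"
    length = rng.randint(1, 6)
    return "".join(rng.choice(alphabet) for _ in range(length))


def explore(n: int = 10_000, seed: int = 0) -> dict[str, int]:
    """Feed n generated inputs to parse_quantity and tally what happened."""
    rng = random.Random(seed)  # fixed seed so a run can be repeated
    outcomes: dict[str, int] = {}
    for _ in range(n):
        text = random_input(rng)
        try:
            parse_quantity(text)
            outcome = "accepted"
        except ValueError:
            outcome = "rejected"
        except Exception as exc:  # anything else deserves a human look
            outcome = f"unexpected {type(exc).__name__}"
        outcomes[outcome] = outcomes.get(outcome, 0) + 1
    return outcomes


if __name__ == "__main__":
    print(explore())
```

No user types 10,000 strings like these, so the script duplicates nobody's experience. It only collects data about the input space, and a human still has to interpret the tally.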

While some specific kinds of testing might have a different objective (than testing from the end user’s perspective), such as demoing the product to a potential user, identifying security vulnerabilities in the product, or evaluating the performance of the product, most testing aims to replicate user interactions and understand whether the user would be happy.

[James’ Reply: What do you mean by “most”? What is your unit of measure?]

All of a sudden, why such renewed focus on real-user-centric testing?

Automated tests (using tools that drive UI interactions) also claim to be approximations of real user behavior… Aren’t they?

[James’ Reply: Simulating a user with a script is in no way experiential testing! Experiential testing does not mean duplicating a pattern of input, it means duplicating an experience. Only humans can have experiences. Machines merely collect data. An experience means you strive to feel what that user will feel and run into the same kinds of frustrations they will run into. This enables you to recognize bugs that you wouldn’t notice otherwise.]

Excellent stuff. I agree that experiential testing is the way to go in many cases. One of the things that has frustrated me about having to use third-party (or outsourced) testers is the lack of insight into what is being done with the exploratory testing time that I’ve asked for.

At one point, after delivering a set of scripted “tests” to the third-party team (verifications and checks that have not been automated yet) I asked them to use the remaining hours doing exploratory testing. I was both shocked and bewildered when they came back with “hours left-over that can be carried over to next month.” Extra hours?? What happened during your exploratory testing???

So, the next month I created scenarios and projects that I wanted them to complete. Basically, I gave them an end result and asked them to accomplish that result (e.g. creating automated workflows, importing documents, etc.) without telling them how to do it. I also asked for some form of documentation as to how they accomplished the goal/scenario. I didn’t care what form this documentation took. It could be a step-by-step list (least desirable), a mind map describing their journey and insights along that journey, a video of the actual journey (ideal for evaluating how intuitive our software is), or any of a million other ways of sharing how they accomplished the goal.

[James’ Reply: It sounds like you are independently inventing session-based test management. You are specifying charters instead of procedures and you are asking for session reports.]

The process is still being refined, but each iteration is giving more and more insight into not only the software, but the testing skills and thought processes of testers with whom I have no direct contact.

When I first encountered your writings (along with Michael Bolton’s) I was ecstatic to see/hear that other people had such similar ideas about how to properly test software. I was especially gratified to find words put to concepts that I’d held dear for so long.

Experiential Testing is a great term. I’m going to make sure to use it. Thanks!

[James’ Reply: Thanks for the comment.]

But there is more…

A test automation tool does not interact with a developer. It doesn’t have a discussion. When you test something as a software tester there is a lot of fuzz, misunderstanding, different opinions, insights, etc. A tool does NOT have that.

I’d advise you to incorporate that aspect far more than you currently do in your blogs!

[James’ Reply: I don’t understand your point. When you test with a tool there is also fuzz, misunderstandings, different opinions. None of that is lessened when you use tools.

Tools don’t DO testing. Only humans test. Tools can collect data, but then you have to interpret the data. Tools can perform certain actions, but then you can argue about whether those are useful actions.]

James,

What I mean is: a test tool doesn’t argue with a colleague.

And we all know that discussions can be energy-consuming, even irritating, etc. However, there are also positive effects.

A test tool on its own does not experience that. Hence, it can never be the same.

You could consider underlining that aspect even more in the Augmented Experiential Testing blog.

Is it more clear this way?

[James’ Reply: What difference does it make whether a test tool argues? An LLM may well argue, after a fashion, but let’s say it’s true that test tools don’t argue. So what?

Humans can do experiential testing. They can use tools to amplify, attenuate, or distort that experience. Arguing doesn’t enter into it.]

I think the observation here is that a tester can experience the software and “argue” with the developer, advocating for better results/smoother flow/whatever, while the automation can only say “this passed” (or didn’t) and gives the developer nothing more than the fact that the scenario isn’t “right.”

That leads to making sure the test script is testing the specific thing we think the user would do (a happy path, if you will), but a quality tester can explore and, using the right tools, explain the logic they used to find the potential defect.

Fascinating approach! The concept of Augmented Experiential Testing (AET) truly redefines how we perceive interactive testing. By leveraging tools that enhance the tester’s experience without altering the user interaction, AET bridges the gap between traditional manual testing and modern automation. The real-time feedback and visualization tools mentioned, like the browser extension that records actions and the time-series visualizations, offer testers a deeper insight into their testing process. This methodology not only aids in identifying issues more efficiently but also enriches the tester’s understanding of the product’s behavior. It’s exciting to see how AET can transform the testing landscape by making the process more intuitive and aligned with actual user experiences.