(Thanks to Michael Bolton for structural editing and for helping me develop this concept, which grew out of our recent testopsy. Thanks to Frances White and Marius Francu, on the RST Slack forum, for their comments.)

To test well is to find the bugs that matter, assuming that those bugs exist (and we always, always, begin with that assumption). These bugs begin in the darkness. We bring them into the light. We do this by operating the product in all the right ways. I sometimes feel that the bugs are stuck in a box, and I am shaking that box; banging on it like someone who just lost a coin in a soda vending machine. Notice I said that I feel that. I certainly don’t think it, and I rarely say it, because it makes testing seem like a brute effort instead of a thoughtful design process that is worthy of smart people like us. (But yeah, it can feel like I am the gorilla in that famous luggage ad. Come out you buuuugs!!)

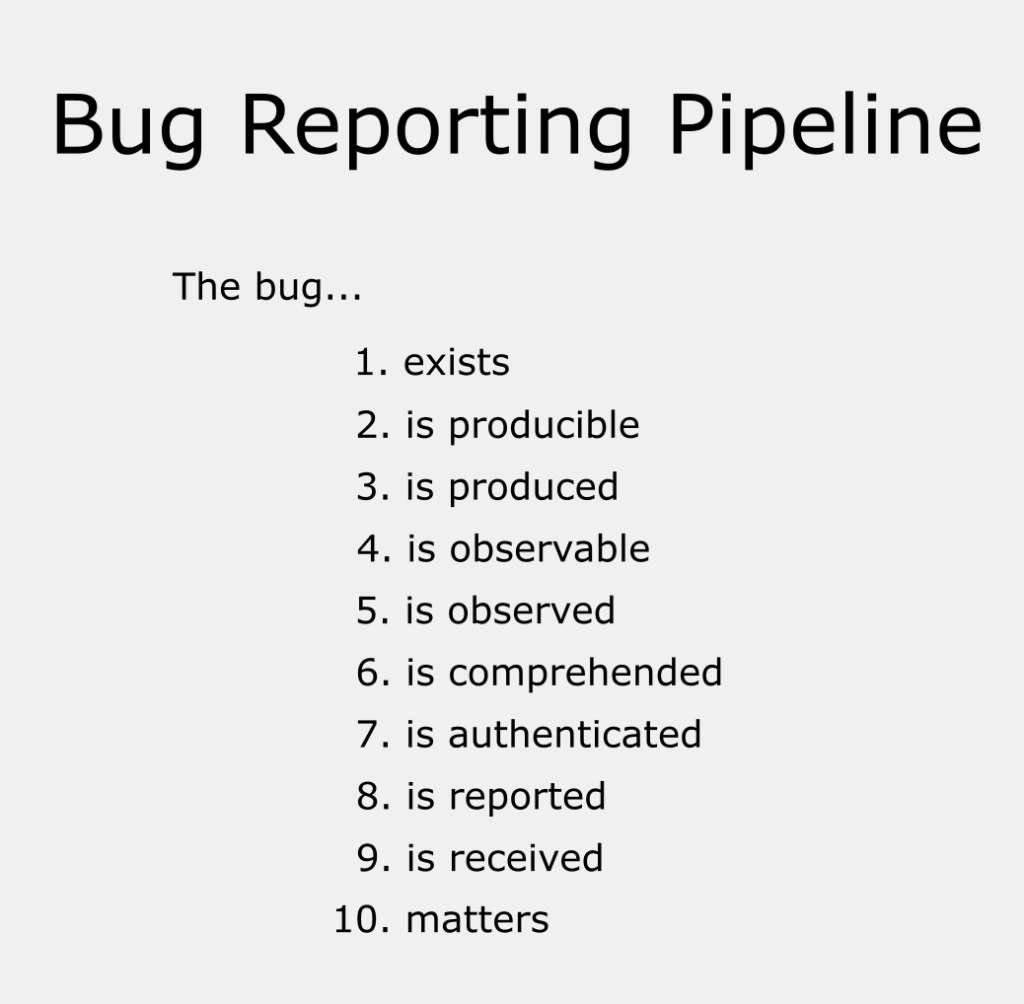

Let’s call that the “kinetic” model of the test process. There is truth to that model, because we can ask “why don’t the bugs just fall right out when we want them?” The answers to that are interesting and deep. The product, the tester, and the test process itself— all these things together— can be thought of as a sieve with different filters that can block the good bugs from being reported. Part of testing well is to keep those filters unclogged. But they are not just filters, they are also steps in a chain of evidence. When you report a bug you are reporting an unbroken chain of reasoning that spans “this is a problem that matters” back to “this exists.”

Assuming we’re talking to the developer, the logic of this process goes something like this:

You’re grateful to know about this bug, now that you’ve got it, because I reported it after determining that it is a genuine bug, following my investigation of it, starting when I noticed it soon after it appeared, having triggered it by a method I now know how to perform at will, which became possible only since this bug was left in the product by you.

That is one convoluted sentence, isn’t it? Bear with me. Since it is both a sieve and a chain, let’s call it something that evokes both ideas: a bug reporting pipeline. Let’s look closely at it and how it can go wrong:

To be correctly reported, a bug must exist.

To report an actual bug, the bug must first actually exist. By “bug” I mean something about the product that threatens its value in the opinion of someone who matters. That means the word “bug” is a broad term that encompasses faults, failures, annoyances, and even enhancement opportunities.

What does it mean to say that a bug exists, even if users haven’t seen it, yet, or never will? Start with the first principle: a bug is always a relationship between a product, people, and context. As people learn more about the product, that relationship becomes ever clearer, and they become happy with the product, or dissatisfied. Now imagine that all the people who matter were to have god-like, perfect knowledge of the product. Then, whatever they would agree is wrong with the product would be the “bugs that exist” at that point in time. That’s what I’m talking about. Of course, you can’t know for sure if any bug exists before you encounter it, but the existence of the bug doesn’t depend on your knowledge or what you see— it depends on the product itself, the people who matter, and the context in which they operate.

The work of testing is to explore the status of those things.

For a bug to ever affect anyone, it must be producible.

The bug must be able to “happen.” Producibility means that there must be some way of manifesting behavior that leads to the trouble that the bug will cause. In the case of a code fault, “producing the bug” means running the code in such a way as to trigger some sort of failure.

Developers and managers can get pretty tetchy about the producibility of bugs. Sometimes they will tell you not to report anything that you can’t reproduce on demand. Push back on that attitude! Maybe try this speech:

Of course, in any non-trivial situation I will try to provide steps to reproduce, but what if I try for a while and don’t find a way to reproduce that bug? And what if it turns out to be a very important bug? And what if, had you known about the one time that I saw it happen, you could have realized what the problem was even without reproducing it?

If I spend too much time trying to reproduce it, I might spend too little time in other testing. If I don’t report it at all, I’m not even giving you a chance to fix it. I think if such a bug gets released, you will not come back to me and say “we are suffering terrible embarrassment in the field, {insert your name here}, but don’t worry because you correctly followed our policy of only reporting obvious bugs.”

How about, instead, we say that reproducibility of a bug is desirable, but not essential? I propose: it shall be our policy that anyone who makes a good faith report of any trouble shall be heard, and not attacked. Bug reporters shall make a reasonable effort to report clearly, concisely, respectfully, and in enough detail to be of use to developers and management. It shall be our policy to welcome any such reports, whether or not they are reproducible (or even actual bugs), because it is better to have occasional bad reports than to create a hostile atmosphere that discourages even one good report.

(I realize this is too long to be an elevator speech, but remember that an elevator speech is mostly for people who don’t care about you.)

Can a bug exist and yet not be producible?

Yes. You can have a fault in code that is unreachable. This might still matter in the case that future development of that product activates that code. The only way to find bugs in unreachable code is through static analysis (meaning methods that do not involve running code).

Can a “bug” be producible and yet not exist?

Yes. Because you might see some behavior and think it’s a bug, then later realize that the behavior was actually correct.

For a bug to come to your attention during testing, it must be produced.

The bug must “happen.” In testing, as distinct from code review (or any form of static analysis), you must manifest the observable behavior of the bug. This you do through operating the product under the right conditions. Good testing is a process of systematically covering the various surfaces of the product (functions, data, platform configurations, interfaces, etc.) so as to maximize the chance of producing every bug that matters.

Can a bug be easily producible and yet not produced during testing?

Yes. You might be testing in a way that is quite different from the way real users use the product. What they see readily, you might never encounter in the test lab.

Can a “bug” be produced and yet not producible?

Yes. You might trigger a bug using input or a system configuration that will never be available in realistic use. Or you might not be testing the correct version of the product.

For a bug to come to your attention during testing, it must be observable.

You must be able to see it. Observability is a key part of testability. Detailed log files and access to back-end interfaces can greatly improve observability. Obscure interfaces and heaps of data stuck in JSONs reduce it.

Can a bug be produced and yet not be observable during testing?

Any bug that is produced is in principle observable, but it might not be practically observable to you given your tools and methods at the time it occurs. Perhaps the bug corrupts a database, but you have no means of checking the entire state of the database.

Can a “bug” be observable and yet not produced?

Possibly. Your eyes and memories are not perfect. You may fall prey to optical illusions or misfiled memories such that you believe you see something that later is proven never to have been present. Testers need to appreciate the frailty of human perception.

For a bug to come to your attention during testing, it must be observed.

You must actually see it. It is not a given that you will see a bug just because it can be seen. In my own experiences videoing and replaying testing, I have been shocked at how easily I miss bugs that were right in front of my eyes.

In the case of automation, you are obliged to be narrow and specific in your observations, because you have to write code for everything you want to observe. Humans are less reliable at making any given explicit observation, but far more able to notice unexpected things—things no one told you mattered, but that you realize are important as soon as you see them occur.

Can a bug be observable and yet not observed during testing?

Easily! One of the things that can reduce the chances of seeing a bug is the belief that there are probably no bugs to be found. It’s very important, as a tester, to convince yourself that bugs are likely to happen.

Another dangerous phenomenon is known as inattentional blindness. This happens when you are trying to pay attention to one particular factor (perhaps looking to see if the titles of the windows are correct), and then you mind automatically ignores other factors (perhaps some numbers that don’t add up) that may be obviously wrong. This is one reason why following a detailed test procedure can cause you to miss important bugs: script following tends to turn off your thinking.

To maximize what you observe you can test in pairs, perform tests more than once, video your testing so that you can play it back, don’t test when you are sleepy, or at the very least: be aware of how easily you can miss important bugs that you see only once.

Can a “bug” be observed and yet not observable?

Possibly. For the same reasons given above: your eyes and memories are not perfect. Also, the very mental state we need to be sensitive to bugs—the belief that they are likely—may also trick our minds into seeing something that is not there. For instance, as an arachnophobe, I have had the experience of seeing spiders out of the corner of my eye that turned out to be smudges on the wall, or a piece of fluff on the floor. Still, my brain would rather that I be too sensitive to spiders rather than not sensitive enough, and I agreed with my brain about that.

For you to make a compelling report, you have to comprehend the bug.

You must think you know what’s happening, to some reasonable degree. You don’t necessarily have to know all of what’s going on. But you strive to understand enough to be able to describe it, localize it, and know how to produce it at will.

Can a bug be observed yet not comprehended during testing?

Easily! You may see any given output, plain as day, and yet not understand why the output was produced, or how it could have been produced. Too many testers, too often, have only a shallow understanding of how their products work.

Can a “bug” be comprehended and yet not observed?

Yes. You might guess, or infer, that a bug exists just based on your knowledge of the design of the product, or on your memory of bugs in similar products. This is called risk-based test design. That comprehension may lead the way to producing the actual bug, or maybe you will find that the bug you feared does not actually exist.

For you to know that the behavior is actually a bug, you have to authenticate it using a good oracle.

You must have reason to believe that the behavior or attribute you are looking at is in fact wrong. Some things might look wrong at first and turn out to be right after further review. Some things might look wrong to you and yet be right in the minds of the people who matter. This is called the “oracle problem.” How do you know it’s a bug?

As a tester you are an agent for the people who matter. But it’s probably not economically viable to get all of those people to watch you test. So that means you must internalize how they think and what they want. Of course, you may have written requirements or some other detailed reference to work from, but often—for many kinds of technologies and for many kinds of bug—you won’t have definitive oracles. This can be an especially difficult problem to solve if you are automating, because you literally cannot encode all aspects in a which a product can misbehave, and you will drive yourself crazy trying.

The bottom line for bug reporting is you must be able to explain why the bug is a bug, and also why it’s a bug that matters.

Can a bug be comprehended and yet not authenticated?

Yes. The ordinary way this can happen is that the process of comprehending the “bug” taught you that it was correct behavior. What you must guard against is dropping a real bug because you are using weak oracles, or the wrong oracles.

Can a “bug” be authenticated and yet not comprehended?

Yes. If you misunderstand or mis-characterize some output, it is easy to see how you might think it’s a bug. For instance, if a certain report is only created in “subscription plan mode” and you see that it is produce in “basic plan mode” that would be a bug—unless it was in fact in basic plan mode and you had gotten your browser tabs confused.

For your client to find out about the bug, you must report it.

You have to tell the story of the bug. There are many ways of reporting bugs, from formal (a Jira ticket with steps to reproduce) to extremely informal (“hey look at this!”). In your reporting you decide that you have the right level of knowledge, what forum in which to offer the report, what form it should take, what sort of supporting material is needed, etc.

Can a bug be authenticated and yet not reported?

Too often! This can happen if you feel intimidated by an angry development team or managers. Maybe it’s very late in the project and you don’t want to make them upset. It can also happen if the bugs are being found so quickly that you lose track of some of them. We call this the “money booth” syndrome.

Can a “bug” be reported and yet not authenticated?

Yes. This can happen if there is a disagreement about the oracles but you elect to report anyway on the chance that it matters. Or it can happen if people who aren’t committed testers are looking at the raw results that come out of automated checks. What often happens in this latter case is that management shrugs and says “oh these are probably just glitches in the automation.” (No project I’ve seen works by letting machinery “report bugs” without the intervention of human judgment, unless it’s something slam dunk obvious, like a crash or safety violation. Even then it’s not really a bug report, it’s a trouble alarm that hasn’t yet been investigated. The value that human authentication brings— when done properly— is that you believe a report you didn’t write is definitely worth your time to read.)

For your client to appreciate the bug, they must receive the report.

Your client must “get” it. Your reports must land with them. This is a social and cognitive problem, and I’m afraid it has a lot to do with your personal credibility. You must build that credibility and protect it. That process of building credibility can take months. Destroying your credibility doesn’t take long at all.

The reporting usually begins before there’s a formal report, in the sense that you discuss your findings with the developer before you go to all the trouble of writing them up.

When a team member says to me “we don’t need testers, because we can get feedback directly from real users” I ask them “Have you ever seen feedback from real users?” Because you know— if you have seen comments about quality written by frustrated outsiders who are not being paid to get along with you— that it can be quite difficult to read and process those messages. As a tester, I enjoy reading it and find it useful. Most developers have little patience for it.

Can a bug be reported and yet not received?

Yes, especially if there are a lot of reports, or if they come in at the eleventh hour. They can overwhelm developers and management. This is why you must organize the reports well, categorize them, and especially give each report a short and compelling title. You often need to follow up to make sure it doesn’t get lost in someone’s inbox.

Can a “bug” be received and yet not reported?

Possibly. This can happen when developers or management watch users in action, and see directly the frustration they experience. That’s one reason why getting groups of users to experience the product in the presence of the product team can be such a powerful experience. When I do that, I don’t ask those users to write any reports, I have specialists do that. But in that case the formal reports are just icing on the “cake” of direct observation by the people who matter.

For your client to do anything about the bug, they must think it matters.

We want to find bugs that matter. If you report bugs that don’t matter, testing will not be seen as an important process. You will not feel valued. Now, of course, you don’t control whether there are important bugs in the product. That is entirely outside of your scope. The developers create the bugs, or else the customers do, with their changing wants and needs. But there are probably good bugs in your product. In any case, you should have faith that there are.

If you test in a risk-based way, you will orient yourself to find the bugs that, if found, probably will matter.

Can a bug be received and yet not matter?

Yes. Lots of things can be bugs in a technical sense but don’t really impact the user. These can range from cosmetic problems, to minor performance problems, to behaviors that simply were not exactly what was in the original spec.

Can a bug matter and yet not be received?

Yes. In other words, a bug may do damage to your company or your customers without you becoming aware of it.

Alas, bugs frequently get into the field, in our industry. DevOps keeps wanting to push software out and feel that the testing process is slowing them down, perhaps assuming that no problem can be all that bad. Agilists preach that “everyone should test” (which means, at best, that everyone tests poorly and no one really takes responsibility for it). Technologists preach that “all testing should be automated”, which means that no testing is automated (because testing is not a thing that machines can do) and what does get automated is a smattering of narrow output checks that look like testing to people who don’t care about testing. So, of course, many bugs get in to the field. But whether they really matter is a question for your industry, your customers, and your level of risk acceptance.

For people who take testing seriously, we want to find every bug that could matter. And when we fail, which we often do, we learn from that and improve ourselves— the way automation can’t and amateur testers won’t.

Very nice analysis. It gives lot to think about. Perhaps another 1 option to add is Bug was found, authenticated, accepted as valid from development team but was not fixed because the effort to fix it surpass the benefits. Example : a very high development effort such a change the whole product architecture in order to fix the bug. It makes it not worth it sometimes.

[James’ Reply: To some degree I’ve rolled that into the notion of “matters.” If the bug matters enough, it will get fixed. But more to the point of this article– this is the bug reporting pipeline, not the bug fixing pipeline. My concern as a tester is really not whether they fix bugs, but rather whether they feel I have given them a high-quality bug list to work with. Making a reasonable decision not to fix a bug isn’t a leak or clog in this pipeline.]

Thanks for the post James, it reminds me of some of the work of Douglas Hoffman around “The anatomy of a successful test” and is an interesting lens through which to view Testability and Operability.

Thank you for such a deep analysis !

Thanks for clarification James. Indeed I was under the impression you included the bug fixing pipeline. Seems this is not the case.

[James’ Reply: That would be a much bigger pipeline, but well worth writing about for someone who specializes in creating quality.]

Regarding reporting on bugs that you can’t reproduce:

If you report such a bug, what do you expect will happen? That the developer will try to reproduce it? As the tester you should know how the system works and if you can’t figure out how to reproduce it, you can be pretty certain that the developer won’t spend much time and effort trying.

I would possibly do an informal “report”, i.e. “Hello developer, I saw this thing and I have no idea what caused it. Any idea?” Maybe pairing and trying one more time to reproduce, but that’s it.

Any bug report raised in any bug tracking system would be closed as “Cannot reproduce” and valuable time would be wasted.

[James’ Reply: I will reply to you as a developer (which I was before I was a tester) and as a manager (which I was for ten years in Silicon Valley). So, pay attention:

(as developer) “Please report anything that, in your judgment, could be a big problem. Even if I disagree with you, I will thank you for reporting it. If even one out of ten of those reports turn out to be a major problem, you will have saved me a lot of trouble and embarrassment. You might think you are wasting my time, but here’s the thing: I know more about how this technology works than you do. Something that, to you, is strange, might not be strange to me. But maybe I also won’t understand what it is or how to reproduce it. In that case it’s still good for us to have a record of it in the system. I have a lot of experience with bad bugs that looked like small bugs when we first encountered them. Trust me. If you see something, say something.”

(as manager) “Please report anything that, in your judgment, could be a big problem. As a tester, it’s your job to be a good filter, but your ability to produce a bug is not a good reason to filter it out, unless you are pretty sure it would only be a small problem even if you could repro it. As a manager, I appreciate that you filter what you see, but don’t try to do my job for me. I’m in charge. I’m the one who decides whether the risks we are taking are justified. I can’t do that if I am not informed about the potential problems with our product. A bug that you saw one time is a bug that probably happened and probably could happen again. So, I’d like you to maintain a mystery bug list (like the one they used to keep in the Borland C++ team after they shipped a hard to reproduce bug that forced a major recall). The mystery bug list is a list of things you can’t reproduce. We should talk about this list at each project meeting.”

]

I’ve never met a developer like you. A good developer will pair for 30 minutes trying to reproduce the bug, a more old-school response is “my code is perfect unless you can prove otherwise”. No-one has ever asked me to file a bug report for something that cannot be reproduced. And I still don’t see the point other than as an ass-covering exercise so that when the bug is found in production you can say “I told you so”. Which doesn’t help in any way to fix the bug.

[James’ Reply: I got to be the way I am because I’ve seen a lot of failure. Some of the failure I’ve seen have been on my own projects. A lot of it has been from visiting other peoples’ projects (I have visited maybe 200 companies on a consulting basis, which might be more than you have). This has given me a keen sense of how easy it is for a bad bug to hide from me. This is no mere CYA exercise, but rather what it means to take your job seriously and be a responsible engineer. At a certain young age that wasn’t my goal. At this time in my life, though, my paycheck is not much of a motivator. I am motivated mostly by self-respect.]

I have had managers like that, who says “report everything”, then when it’s getting close to a release there is a mad rush to tidy up the reports to make everything “green”. Part of that clean-up is to close anything that can’t be reproduced.

As a manager, what value is it to you if I say “there is a risk we could have a big problem, but I don’t know what the risk is or how big it is”?

[James’ Reply: There is no value in saying that at all– if that’s all you are really saying. But you have left out the details! In real life, you tell me WHAT you saw and WHY you are concerned about it. Then I decide, as a manager, what I want to do about it. What I might do is delay release for a week have the developers instrument the code and work with the testers to do a comprehensive sweep of a certain functionality to get to the bottom of a data corruption bug. That’s what we did at SmartPatents, when I worked there.

A huge client of mine told me a story of how they did NOT do that with one such bug and ended up shipping a product that systematically deleted ALL customer data, resulting in massive costs to make it right. The bug was found TWICE during testing, but each time management thought it was just an artifact of the test environment. Now they are more careful.

At Borland, years ago, management put up signs offering a cash bonus for anyone who could find a reproducible case of a certain project file corruption bug.

At HP, in the LaserJet firmware team, I saw a prototype printer bristling with diagnostic equipment, where they had been doing weeks of testing just to catch one strange problem.

Management gets to decide what to do. Maybe you have only worked in shops where the stakes are low. I recently taught at Volvo, though, where I was deeply impressed with the attitude I saw about security and safety testing.

But I hear you! There are many companies that don’t take quality very seriously. Those are probably companies with leaders who have not yet suffered the consequences of sloppy engineering.]

I have once seen a bug that represented itself one time.

Many people said that was impossible. Still I told them I know what I´ve seen, and they kept saying: that is impossible.

Then we had a Demo (Sprint Review) and this time it was for a large crowd in a big auditorium. When I presented the new functionalities we had accomplished that Sprint suddenly the bug appeared. “It flashed by once again”, but now I was not the only person (in this case tester) anymore who was saying… I’ve seen it I’m sure of it.

Hi James,

Really good and comprehensive post!

It also made me think about the preparation you should do as a tester before this analysis.

What do you have to do in order to be able to understand that it is a bug, i.e. something that threatens the value?

In my early years as a tester I think that most of my reported bugs were those triggered by faults in the code (crashes, script errors, etc). Easy to observe, yet they might have been tricky to provoke.

However, the more senior I have become as a tester, I believe that more of my bugs found are dealing with cases where I use my understanding of “what values” and “who matters”. (My take on HTSM really helps me in understanding this…)

Having said that, it might be even harder to report the bug and anchor it with certain stakeholders. Often because they haven’t done the analysis themselves so it demands even more of me in order to explain what values that are threatened, for whom, and why they should matter.

Cheers,

Henrik

[James’ Reply: Quite right. This model is meant to highlight that problem. It doesn’t do much to solve it, though. That’s a job for a different model, such as one that focuses on tester skills, or testability, or risk analysis.]

I really like this post, it remembers me to my 6 years manual testing time. I did testing without any knowledge of testing , no coaching, no reading…no no. just I have the product and product’s help that is prepared for the users of the doc. I wish I got this kind of resources at that time.

It is really helpful for every testers.

>>> Can a bug be observable and yet not observed during testing?

one more reason we missing observing bugs is our lens ( a collective term for combined sensory faculties – not just eye). Testers do not “straight” away “observe” bugs – they go in “hunt” for them.

When you hunt for “something” in the wild – you almost all case will have an “image” or “model” of how the stuff that you are hunting. So this in a way limits what you can “see” and what you ignore.

Let us say I am looking for a blue pen (blue of certain shade and shape). My mental process would be keep looking for a “that” image of pen. What if you have a wrong image (or any of its details) ? you will miss it even if it is in front of your eyes.

How to make bug observable – or how to make bug “show” up on its own? Let say bug disguises as a soldier wearing a particular uniform and mixes up with other “real” soldiers. But being fake – the uniform of the fake soldier – sheds its color when water is sprayed on it.

In this case – spraying water on a group of soldiers to spot hiding bug will be a technique to separate bug from rest.

thinking purely from bug’s perspective – how can we (programmers) dress up or tag bug if and when such situation happens. Then testers can “spray” water to pull out the fake guy !!!