I didn’t coin the term exploratory testing. Cem Kaner did that in the 80’s. He was inspired by “exploratory data analysis,” which is a term coined by John Tukey. In the late 80’s and early 90’s I was noticing that testers who did not follow any explicit script were better at finding bugs than those who did. I decided to find out why. That led to my first conference talk called The Persistence of Ad Hoc Testing, in 1993. Soon afterward I encountered Cem’s terminology and began saying exploratory testing. At the time, Cem had left the industry to become a lawyer, so I was literally the only testing guy speaking at conferences about exploratory testing. (If you were speaking or writing publicly about ET at that time, even by another name, please contact me so I can correct this claim.)

In early 1995 I met Cem, who was coming back to testing. We joined forces. In the years that followed, we successfully popularized the idea of exploratory testing. In 1996, I designed the first class explicitly devoted to ET. This became the core of the Rapid Software Testing methodology. I also created the definition of exploratory testing that the ISTQB partially plagiarized and partially vandalized in their syllabus. In other words, this is my area of special expertise. Those of us really passionate about studying this have evolved our understanding over time. I will tell you where my own education and analysis have taken me, over the last 30 years or so.

The Exploratory Nature of Software Testing

I will begin by summarizing the basic concepts that comprise the essence of software testing practice. In doing so, I aim to convince you that testing is an exploratory process. It’s not just sometimes exploratory; it is inherently exploratory. In other words, whatever else you may be doing when testing at a professional level, you are also actively learning and making new choices about what to do next based on what you learn. You are never merely following a pre-established procedure. But while that means the phrase “exploratory testing” is largely redundant, some testing is especially exploratory (i.e. informal, decided moment by moment by the tester), while some testing is especially scripted (i.e. formal, determined by someone else or at some earlier time). The testing process is always some mix of the two approaches. To do testing well, we need to know how/when/why to emphasize choice and how/when/why to emphasize procedure.

Testing is not A science; testing IS science. To understand the nature of the testing process, we should start with science. Imagine a science teacher in grammar school who does a chemistry demonstration. First, the teacher describes the setup and the equipment. Then he explains the chemicals and describes the reaction that will occur. He mixes two transparent liquids together, and you see the resulting solution slowly turns dark blue. This is commonly called a “science experiment,” but only by non-scientists. The teacher knew exactly what would happen; therefore, it is more properly called a demonstration.

The Oxford English Dictionary defines an experiment as “a scientific procedure undertaken to make a discovery, test a hypothesis, or demonstrate a known fact.” So, the science teacher has technically performed an experiment, but not one that any real scientist cares about. Why not? Because scientists are in the business of learning new things. What professional scientists call an experiment is a systematic process of investigating some phenomenon; specifically, by controlling a system in various ways while methodically observing its behavior in relation to that control. Such investigating is only necessary in a situation where there is something you don’t already know about the phenomenon or system in question.

An experiment can be highly controlled or lightly controlled; more formal or less formal. But one thing is true about a real-life experiment: it is uncertain. Any scientist who knows for sure what will happen in an experiment is merely performing a demonstration; not learning. The learning that scientists do is disruptive to their work; and they love that. The people who run the Large Hadron Collider would like nothing more than to invalidate the Standard Model of particle physics by learning something unexpected that would require new theories and new experimental designs. But there is a major difference between a physicist and a software tester: the things we testers experiment upon are far less known and far less stable. No physicist worries about needing to “regression test” physical laws because they might have been changed the previous night. Yet, that sort of thing does happen to testers, so our learning curve never flattens out, and our test designs must be allowed to change.

When people do real experiments, even rather formalized ones, unexpected events can occur and unexpected data may be obtained. Scientists use reason, insight, and curiosity to react to that new information. This is called exploration. There are grand examples of scientific exploration, such as building a probe to chase a comet. But even in small everyday experiments, scientists scratch their heads over the behavior of two bats hunting together, or wonder why a bumblebee suddenly charges off in a random direction while collecting nectar. They hypothesize and construct new protocols to observe these phenomena. That is exploration, too.

Software testing is exactly like that. Software tests are not just similar to scientific experiments that test hypotheses or discover new things, they are experiments (the word “test” is right there in the definition of experiment). Software tests are experiments and the professional tester is a kind of scientist who studies the product under test. If you want to learn how to test very well, study the design of experiments.

Exploratory testing means performing tests while learning things that may influence the testing. This is a scientific process.

Formality vs. Informality

Formality is a major issue in both science and software testing. It means “having a conventionally recognized form, structure, or set of rules.” A formal situation is one that is dictated by choices of some other people or by you from some previous time. Formality is followed; it is adhered to. Conversely, informality means something not constrained to any particular form, structure, or set of rules– in other words, the experimenter is free to choose here and now. Formality is important because it allows us to make strong statements about what we have and have not done; what we have and have not proven. Formality makes mathematics possible. Formality facilitates reliable, dependable results. It also makes certain kinds of collaboration possible, whereby the work of many people must remain consistent over time and space.

But formality has a cost. Setting up a formal arrangement of any kind means making design decisions that include some things and exclude other things. Fixing upon a good solution, now, may mean giving up a better solution, later. As an example, the IPv4 protocol is so widely adopted that the switch to IPv6 has been painfully slow. Twenty years after the IPv6 draft standard was settled, more than 90% of traffic on the Internet is still using the older standard. IPv4 is an example of both the incredible value of standardization and the incredible difficulties standardization may impose.

Informality is important because it allows new information and new ideas, even ones that are inconsistent with all that have come before, to inform our work. Informality allows us to benefit from tacit knowledge, which is knowledge that is unspoken and unwritten and therefore invisible and unjustified within any formal framework. Informality is often associated with spontaneity, too. Play is informal, by definition. Yet play is vital for creative problem-solving. Apart from helping us discover new solutions, playfulness gives us motivation. It liberates energy we need to do difficult things.

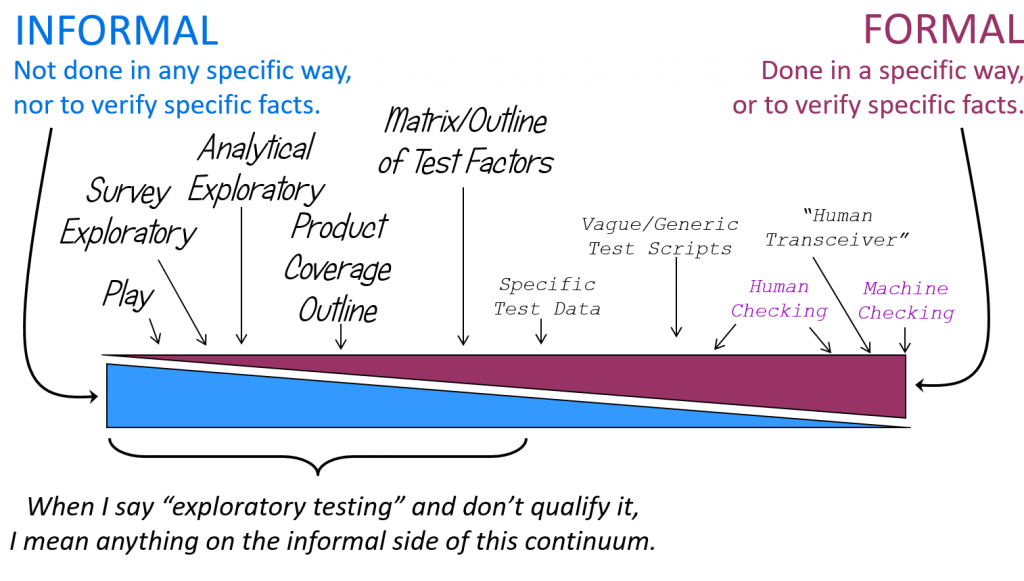

The formality continuum, above, makes the point that no testing is completely exploratory, nor completely scripted. It’s a mix. Good testers learn to control the mix. In RST, we don’t necessarily use the term exploratory testing, but if testing is very informal, calling it exploratory can be okay; just as if it is very formalized, calling it scripted is reasonable. In general, I think it is more helpful to ask in what way any given testing is exploratory and in what way that same testing is scripted, and then ask if that mix makes sense.

All testing is exploratory, at least to some degree, and exploration is the natural mode of testing.

Agency vs. Algorithm

Formality is tied up with another key idea: agency. Agency is about choices; who can make them; who is accountable for them. A test tube sitting in a laboratory has no agency. Even in the most formalized and mechanized of experiments, which minimize human agency, we would never say that a test tube is “doing science.” We would not say that a thermal probe is “experimenting” on the liquid it measures. There are robots on Mars right now, but no one would call them “automated scientists.” They are tools.

Science is always done by people, usually with the aid of tools, and the minds of good scientists are ever curious and not robotic or algorithmic. Algorithmic processes are immensely useful, of course. But algorithms are pure processes without agency. Any “free” choices are made not by algorithms but by designers of the algorithm. And there is no algorithm for testing itself. Algorithms are applied in testing, but always by people. The people who apply them must be accountable for them. The way we know for sure that machines don’t have agency is by looking at what happens when machines misbehave: they are not punished. Machines don’t get sued and don’t go to jail. Machines are, at best, like children. If they misbehave, the blame falls upon the nearest source of agency: their creators or operators.

Exploratory testing is testing by someone who possesses agency and is therefore accountable for that work.

Deliberation vs. Spontaneity

Some testing is spontaneous, in that it emerges in the moment. Other testing is deliberative, in that it is carefully planned in advance. This is not the same as informal vs. formal. Spontaneous testing can be formal or informal; deliberative testing can be formal or informal. Exploratory testing is commonly associated with spontaneous testing, because that’s an easy case to talk about: you step up to a product and “pound” on the keyboard. Type anything that comes to mind. Click on whatever you feel like clicking. React to whatever happens. But it is equally exploratory to sit and think about what would be useful to do next, and to think through the reasons why it would be useful. That’s deliberation.

Now, if you plan multiple steps that you want to take, and carefully stick to that plan, then that would be a scripted test (formalized). If you plan those steps and don’t force yourself to stick to them, that is less scripted (more informal). If you have some deep-seated habit, or if, by not being aware of your options, you always make the same choices, then your “spontaneity” can be highly formal. For instance, imagine only knowing about one food: pizza. When asked what you want to have for dinner, you always say “pizza.” Well that might feel spontaneous, but it is actually pre-determined. You might as well have a written contract compelling you to say pizza. Or perhaps you play the piano, and you practice a piece of music so much that you eventually can play it in a flowing spontaneous way, without reading any musical notation. Finally, we all have little phrases we use that are formalized and yet uttered spontaneously, such as “thank you” or “how are you” or “I am fine.”

Exploratory testing can be spontaneous, or highly deliberative.

Structured vs. Unstructured

Exploratory testing is often mistaken for “unstructured” testing. This is not only wrong, it’s often used as a subtle insult, as if exploratory testing isn’t quite grown up and professional. The way that structure is defined in the dictionary does not help much. The Oxford English Dictionary defines it as “the arrangement of and relations between the parts or elements of something complex.” It seems to me that the word “complex” is not doing any important work there and can be ignored. People speak of the structure of simple things, not just of complex things. Furthermore, when we use the word structure we are always referring to something that holds true over some period of time and/or some space. For instance, the structure of a building does not include the placement of chairs and tables within the building, or where people happen to be standing within the building. Those things may be part of some structure, but not the structure of the building, as such.

Therefore, I suggest this definition of structure for technical systems and technical work: a pattern that persists.

By this definition, all testing is structured, except completely random testing. (Randomness, by definition, has no structure, because no relationship among, say, the values in a sequence of random numbers can be counted upon to persist as the sequence continues.) The reason some people want to say that exploratory testing is unstructured may be that they don’t understand its structure. They may assume, without evidence, that an exploratory process, like a random process, involves nothing that can be depended upon; no pattern that persists. But exploratory testing may have many kinds of structure. It may be structured by goals, tools, skills, processes of learning, time boxes, theories of error, and the elements of the product itself. Any and all of these things shape the testing over time, even though the tester makes new choices about many other things.

Perhaps a more likely reason that exploratory testing is called unstructured is that outsiders may not perceive any commitment to a particular structure. But that’s really about trust, competence, and culture, all of which are manageable without forcing testers to stop using their minds and exercising their technical discretion. After all, we don’t ordinarily demand that a taxi driver show us his “plan” for driving, because our culture encourages us to trust in the intentions and competence of taxi drivers. When this trust is not present, as I have experienced in a couple of countries, then I actually will discuss the structures of an upcoming taxi ride before I get into the car.

Let’s advocate for a sense of structuredness that does not give special privilege to ideas just because they are put into words. In fact, it is unspoken, unwritten structures that we probably most need to become aware of.

Exploratory testing is structured. Testers control its structure.

Tacit vs. Explicit

The primary reason that some people have such trouble understanding the structure and processes of exploratory testing is that much of how we work in testing is not made explicit. Instead, it is tacit. Explicit knowledge is any knowledge that is represented in a form that can be reduced to a string of bits. Anything spoken, written, or pictured is explicit. There is also knowledge in our minds that is not explicit, but could be made explicit if we tried hard enough (e.g. writing down everything you know about France). Written scripts are explicit knowledge, of course. However, there are also a great many tacit scripts by which we humans live, so don’t fall into the trap of thinking that all formal testing is written down in words. In addition to explicit knowledge, we have knowledge that is inherently tacit. That means knowledge not put into a form that can be reduced to a string of bits. This includes knowledge built into our minds at a low level (e.g. “I feel surprised when I see flashing colors”), knowledge that we may not consciously be aware of (e.g. “I didn’t realize that I believed a window should not flash strange colors when I scroll it until the moment the product started doing that.”), as well as knowledge we develop in the moment in response to social situations (e.g. “Management was annoyed by my bug report about flashing colors, because they said it is ‘merely cosmetic’, but I can edit it to make them see how important it is.”)

The study of expertise is still relatively young. Even so, sociologists, anthropologists, psychologists, and other scientists who study human behavior have developed elaborate protocols to identify tacit knowledge. They do not allow themselves to assume that the processes and knowledge that a person puts into words represent the processes and knowledge by which that person actually works.

Unfortunately for our understanding of ourselves, few computer scientists are also sociologists. The people who run software projects, or write testing textbooks, are rarely conversant with the protocols and discipline of social research. It might even be said that computer scientists and software testers are not competent to say how they themselves do their own work! They are experts in talking about software, but not necessarily in identifying the thought processes by which they think about software. This has led to a huge emphasis on algorithmic accounts of software testing. In other words, formal testing gets all the attention for the simple reason that it’s the only thing textbook authors know. In the language of social science, formal testing is more legible than informal testing.

How can you avoid this pitfall? Easy: pay attention. To what? To testers!

You must watch testers work and you must watch yourself work. Learn to respect that there is a deep structure even to such seemingly trivial tasks as deciding when to interrupt a test process and when to stick to it. Tacit knowledge is developed not by reading and memorizing instructions. It is developed through the internal theorizing you do when you watch someone else work or when you engage in an interactive conversation. It is a product of the innate model-building that happens in your mind when you struggle to solve a problem, such as how to make a program produce a certain output. In other words, tacit knowledge is founded on the experience of living and working and socializing in a stimulating world.

As you test, you develop and use a mental model of the product under test. You can make formal models, too, but no formal model will capture the full extent of your mental model. Your mental model includes not only details of what the product is, but also how it works, what its purposes and uses might be, what it influences and connects to, its past and future, its similarities and relationships to other products, patterns of consistency and inconsistency within it, its potential problems and prospects for improvement, etc. Your mental model is automatically created and maintained through the processes of interacting with the product and reflecting on what you know. This knowledge is then crucial to your ability to evaluate the product and report on the status of your testing.

Part of how we mediate the development of new knowledge about complex systems is through the processes of curiosity and play. The spontaneous as well as deliberative ideas for new interactions with a product occur in response to our internal explorations of our emerging understanding of that very product. Therefore, we cannot expect to formalize the processes of this learning, since we cannot predict or control those moments of curiosity. Exploration is crucial to learning about anything complicated.

Exploratory testing is not only reliant on tacit knowledge, it is a process for developing tacit knowledge about a product that in turn allows you to test it better.

Exploration is unpredictable, yet responsible and controllable

I once was driving on a dark road when a deer came charging across the road on a direct collision course with my car. I had about two seconds to react, so instead of hitting the brake, I swerved away from the animal, just enough so that I hoped he would bounce off the side of my vehicle rather than hitting me head-on. My two right wheels went into a ditch and my chassis scraped along the ground. But I was still going 40 miles an hour, so with that momentum I yanked hard to the left and luckily popped quickly back onto the road. By that time the deer had disappeared, having just missed me. It felt like I had just performed a movie stunt.

When you drive a car on a public road you can’t know what is around the next turn. You can’t know what other drivers will do. Yes, you can make some good guesses, and mostly your guesses will be right. But no matter what, you must stay alert and try to be ready to react to the unexpected. In other words, driving a car is an exploratory process.

Exploratory processes are not entirely predictable. In fact, they may be quite unpredictable. When managers say that they want a more predictable test process, I tell them that there are two ways to make testing entirely predictable: have the developers tell me all the bugs before I start testing (they never can), or stop caring whether we find bugs (good managers never stop caring).

Exploration may take different forms at different times in the project. When you first encounter a product and you don’t know much about it, you explore to learn what it can be. In RST, we call this “survey testing,” which means any testing that has the primary goal of building a mental model of the product (i.e. learning about it). Although its primary goal is learning, it is real testing, because you may encounter and report bugs while doing it. Survey testing is a very exploratory process.

You can also explore before you ever see a physical product. You could do this by reading about it, reviewing similar products, or having conversations with the developers and designers. You can ask questions, either out loud or silently as you perform research. This helps you build that all-important understanding of what you will eventually test in physical form, and might also lead to finding bugs in the specifications and ideas that you encounter.

Early in the test process, specific ideas about how to test the product will emerge. Resist the urge to write down those ideas as formalized “test cases.” It’s too early for that. The worst time to formalize testing is at the beginning of a project. Planning–getting a general idea of what you want to do, and even writing down those ideas–is not the problem. The problem is making premature and binding decisions. Formalization is premature unless you have already informally experimented with possible test procedures and gained tacit knowledge from that process. You will learn a great deal very quickly when you are actually in front of the product. But if you have already formalized the testing you will either have to throw away most of that scripting and start again, or else ignore what you learn and follow procedures that you now know to be bad.

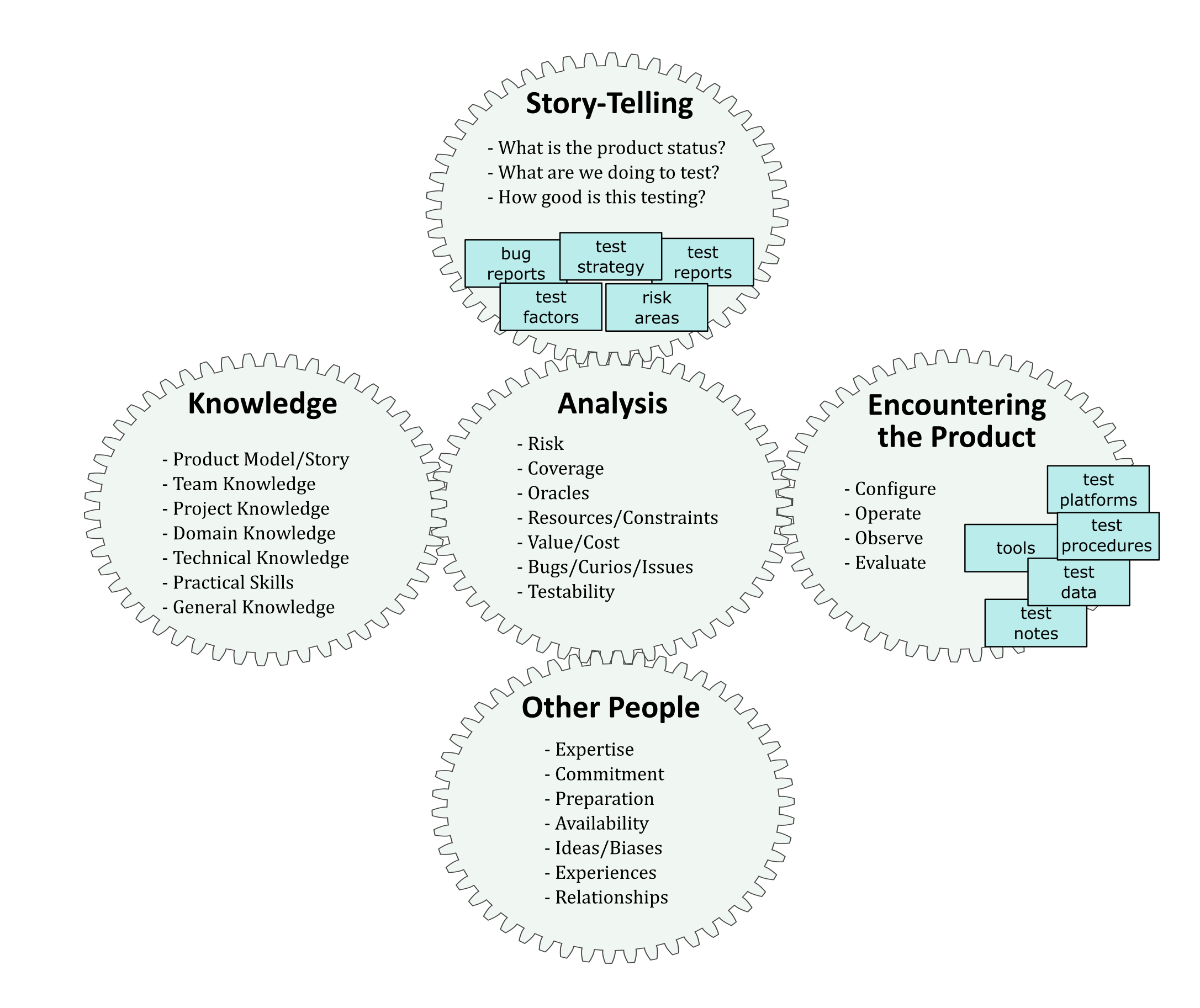

Does this sound too general? Then let’s get into details. Consider this diagram of the testing process, which lists many details:

We call this the “gears” diagram. You see five kinds of basic things here: analysis, story-telling, encountering the product, other people, and knowledge. Each of these elements affects the others, which is why they are shaped like gears. Each of these elements evolves over time, and that evolution is an exploratory process. It is not pre-ordained. The cyan-colored rectangles are artifacts that come into existence and get refined or reworked as we test.

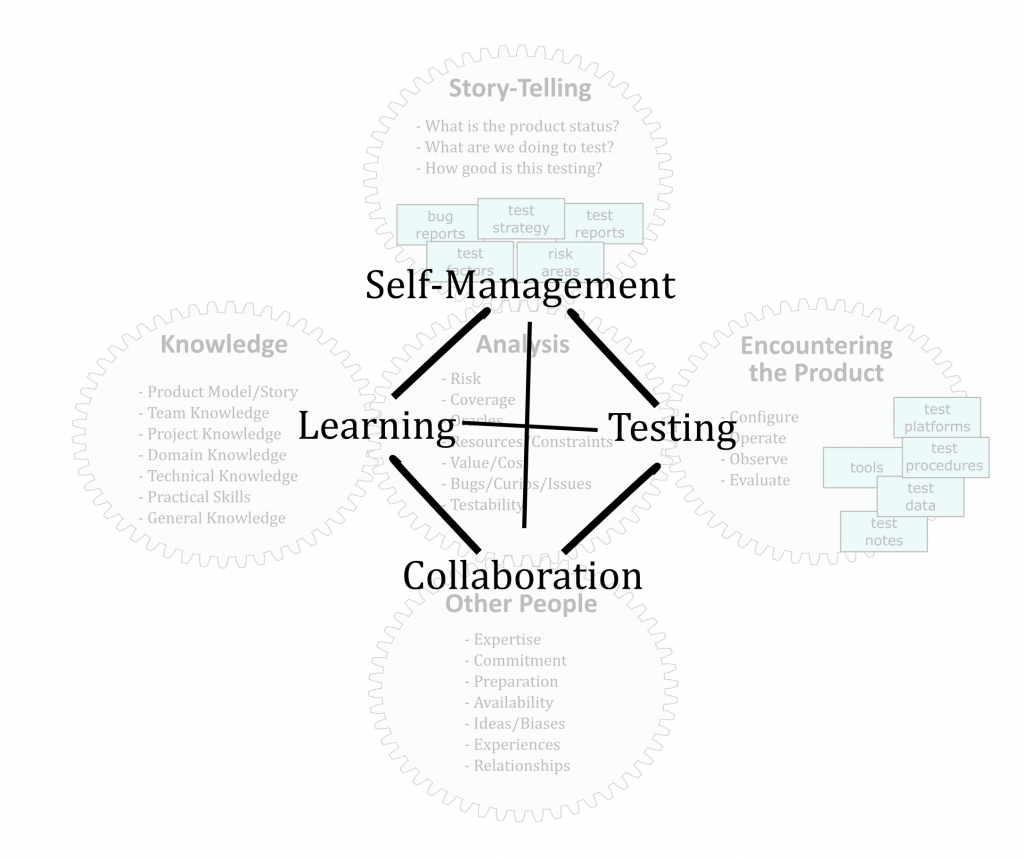

Where each pair of gears engages we can identify a special process. For instance, where analysis meets knowledge, that’s learning. The interacting of analysis with story-telling is a process of self-management. Exploration is, by definition, self-managed. How we go about that is by continuous consideration of the story of our work, which is the product of analysis and drives future analysis.

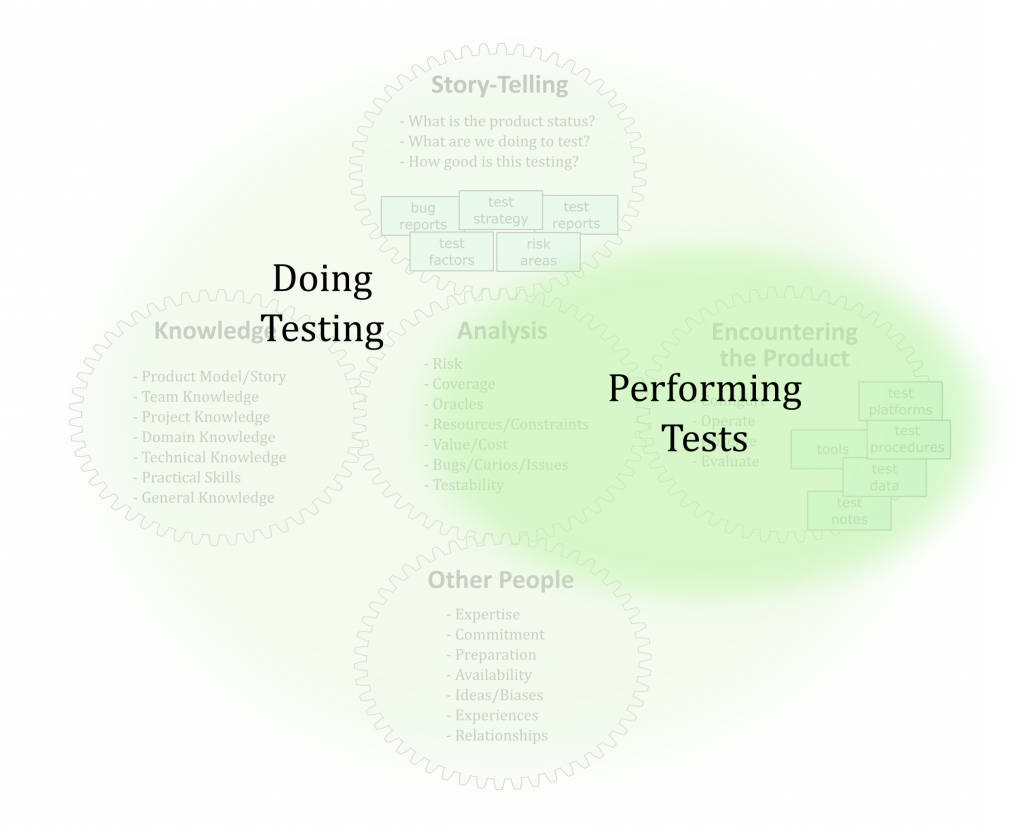

It is very important to understand that doing testing involves more than just performing tests. Doing testing is a process that usually remains very exploratory– even in projects where performing tests becomes formalized.

As you test informally, you will see opportunities to formalize your testing to some degree. You will decide upon specific techniques to construct test data and perform specific experiments. Some of these you will encode into a form that affords automated output checking. Still, your formalization is not the end of your exploration. After you have a strong idea of what the product ought to be and how you want to test it, you still explore to find hidden problems and unknown risks. Even in the midst of a formalized test procedure, when you find any indication of a possible problem, you must explore to discover the extent of that problem. All along, throughout testing, you keep your eyes and mind open for new surprises.
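To make the relationship between a formalized check and continued exploration concrete, here is a minimal Python sketch. Everything in it is hypothetical and invented for illustration: `parse_percent` stands in for some product code, `scripted_check` for a formalized output check, and `probe` for a small tool that helps a tester try variations nobody scripted. It is only one possible way to picture the idea, not a prescribed method.

```python
def parse_percent(text: str) -> float:
    """Hypothetical product code: convert a string like '50%' to 0.5."""
    return float(text.rstrip("%")) / 100


def scripted_check() -> bool:
    """The formalized part: one fixed input compared to one expected output."""
    return parse_percent("50%") == 0.5


def probe(inputs):
    """Run variations and record what happened, without judging the results."""
    findings = {}
    for text in inputs:
        try:
            findings[text] = parse_percent(text)
        except ValueError as error:
            findings[text] = f"ValueError: {error}"
    return findings


# Variations a tester might think of only after seeing the product behave.
variations = ["50 %", "%50", "50%%", "-5%", "1e2%", ""]
findings = probe(variations)

print("scripted check passed:", scripted_check())
for text, outcome in findings.items():
    print(repr(text), "->", outcome)
```

The scripted check passes, yet the probe shows that this hypothetical function also accepts inputs such as `50%%` and `1e2%`. Whether any of that is a problem is a judgment the code cannot make; deciding which of these surprises matter, and what to try next, remains the tester's work.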

Formality is a heuristic for maximizing the integrity of the test process: that means it helps you better understand and describe what testing you have done. It does this partly by sacrificing flexibility and therefore some forms of variety, which means formal testing risks being more shallow than informal testing; less likely to uncover some kinds of obscure bugs. This is why all good testing must remain exploratory to some degree. Testers may push buttons or invoke code, but that’s not the value testers provide to the project. All good testing needs a thinking, socially competent human in the loop, steering the process and managing its formality.

Although formality may be needed to achieve our goals, in the presence of complexity, uncertainty, and change, exploration is essential.

Testing is Not Proving; Verification is Not Testing

No matter how formal your testing gets, you cannot use testing to “prove” that software “works.” If you follow a specific procedure and the product behaves exactly as you were certain that it would behave, that is a demonstration. This might be reassuring, and it might help you explain what the product is supposed to do. It is certainly a part of a testing process, but please don’t call it a test. Calling that a test encourages people to think that testing is really just about thoughtlessly mashing buttons. I suggest calling that an output check and reserving the word test for the world of thinking people.

Performing checks (sometimes called “verifications”) is certainly part of testing; it’s a fundamental tactic of testing. But the full process of testing involves modeling, sensemaking, and reasoning about product risks and making social judgments about them, all of which are beyond the scope of mere checking. Testing is an empirical process; it gathers evidence about the world. This process is subject to all the limitations of evidence that philosophers have cited since Socrates and Sextus Empiricus. Proof, on the other hand, belongs to the world of mathematics; to formal systems and models. Real life systems, even computer systems, are far more complex and far less certain than pure mathematics. Real life doesn’t necessarily behave according to mathematical models, because such models might be wrong or the data about the system incomplete. Even if meteorologists had a perfect model of how a hurricane works, they would still need a vast, impossible amount of data and computing time to provide a completely accurate prediction of its behavior.

Furthermore, models that can be used to prove software correct deliberately leave out the complex, human, social aspects of the systems they represent. Yet new testers often speak casually about proving that their product works. This is a seductive but poisonous way of thinking. Here’s why it’s wrong, in one phrase: can is not the same as will. If someone drinks a lot of alcohol and then drives home safely in a car, that only proves it is possible not to be injured or injure someone else while drunk driving. It does not verify that everyone, always, will be safe. It doesn’t prove it’s a good thing to do. It is only one experience. Similarly, when you experience that your product doesn’t fail in the five minutes that you look at it, that is no “proof” that it “works” because it may have been failing in a way you didn’t see, or it may fail five minutes from now. You can’t know. Here’s another key phrase: Seeing no problem is not the same as seeing that there are no problems. Just because you fail to detect a bug doesn’t mean it wasn’t there in front of you, fully able to be detected. Or it may have been very good at hiding from you, yet not so good at hiding from your users. Therefore, it is wrong to make sweeping statements about what is true about a product based on the assumption that what you see is all there is.
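The phrase “can is not the same as will” can be illustrated with a small Python sketch. The function `days_in_february` and the helper `check` are hypothetical, made up for this example; the function deliberately contains an incomplete leap-year rule so that several checks pass while the bug stays hidden.

```python
def days_in_february(year: int) -> int:
    """Hypothetical function under test with an incomplete leap-year rule."""
    # Deliberate bug: ignores the century rule
    # (years divisible by 100 but not by 400 are not leap years).
    return 29 if year % 4 == 0 else 28


def check(year: int, expected: int) -> bool:
    """An output check: compare one observed output to one expected value."""
    return days_in_february(year) == expected


# These checks all pass. They show only that the function *can* be right.
passing = [check(2023, 28), check(2024, 29), check(2000, 29)]

# One more input, which nobody happened to script, shows that it
# *will not* always be right.
surprise = check(1900, 28)

print("scripted checks all passed:", all(passing))
print("check for 1900 passed:", surprise)
```

Three passing checks are three experiences, not a proof. The failure for 1900 was present the whole time; it simply was not among the things anyone looked at.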

Formal verification of properties in computer programs does exist, but it applies in relatively narrow situations, applying only to certain kinds of software, depending on a long list of assumptions, and depending on the correct operation of verification tools that themselves could have bugs. Formal verification is a nice tool to augment testing, or limit the need for certain kinds of testing. It cannot replace testing entirely. Testing is not a matter of proof, then. It’s a matter of collecting evidence and assessing that evidence. This certainly involves the verification of specific facts about the product, but is far more than that.

Exploratory testing, like formal testing, is a systematic evidence gathering and assessment process, but never proves that a product works.

My blog posts relating to exploratory testing.