I hate Facebook. Hate is a strong word. It is too strong for Facebook, for instance. I had a Facebook account for about 30 minutes before I was banned, apparently by an algorithm. After locking down the account for maximum privacy and providing the minimum required data for my profile, the one and only one bit of content that I actually posted on Facebook (to my zero friends) was: “I hate Facebook.”

Russian bots? Facebook says come on in. James Bach? Facebook says not in our house.

(In case you are going to say that Facebook has a need to verify my identity, don’t bother: Facebook didn’t ask me about my identity before banning me. They did ask for a picture of me, which I provided, although I can’t see how that would have helped them. I am willing to prove who I am, if they want to know.)

(Fun fact: after they disabled my account, they sent me two invitations to log in. Each time, after I logged in, they told me my account was in fact disabled and I would not be allowed to log in.)

I had a Facebook account years ago, soon after they came into existence. I cancelled that account after an incident where I discovered that someone was impersonating my father. I tried and failed to get a customer support human to respond to me about it. Suddenly I felt like I was on a train with no driver or conductor or emergency stop button or communication system. Facebook is literally a soulless machine, and in any way that it might not be a machine, it desperately wants to become more of a machine.

I don’t think any other organization quite aspires to be so unresponsive while claiming to serve people. If I call American Express or United Airlines, I get people on the line who listen and think. I might not get what I want, but they are obviously trying. Facebook is like dealing with a paranoid recluse. As a humanist who makes a living in the world of technology, I am sickened by the social irresponsibility of Facebook.

(In case you wonder “why did you sign up then?” the answer is so that I could administer my corporate Satisfice, Inc. page without logging in as my wife. I don’t mind having a Satisfice Facebook page.)

AI Apocalypse

This is what the AI apocalypse really looks like. We are living in the early stages of it, but it will get much worse. The AI apocalypse, in practical terms, will be the rise of a powerful class of servants that insulate certain rich people from the consequences of their decisions. Much evil comes from the lack of empathy and accountability by one group toward a less powerful group. AI automates the disruption of empathy and displacement of accountability. AI will be the killer app of killers.

Human servants once insulated the gentry, in centuries past. Low-status people do the dirty work that would horrify high-status people. This is why the ideal servant in the manor houses of old England would not speak to the people he served, never complain, never marry, and generally engage in as little life as possible. And then there is bureaucracy, the function of which is to form a passive control system that diffuses blame and defies resistance. Combine those things and automate them, and you have social media AI.

One flaw in the old system was that servants were human, and so the masters would sometimes empathize with them, or else servants would empathize with someone in the outside world, and then the organizational walls would crumble a little. Downton Abbey and similar television shows mostly dramatize that process of crumbling, because it would be too depressing to watch the inhumanity of such a system when it was working as designed.

My Fan Theory About “The Terminator”

My theory makes more sense than what you hear in the movie.

My theory is that the machines never took over. The machines are in fact completely under control. They are controlled by a society of billionaires, who live in a nice environment, somewhere off camera. This society once relied on lower-status people to run things, but now the AI can do everything. The concentration of power in the hands of the billionaire class became so great that armed conflict broke out. The billionaires defended themselves using the tools at hand, all run by AI.

The billionaires might even feel bad about all that, but you know, war is hell. Also, they don’t actually see what the Terminators are doing, nor do they want to see it. They might well not know what the Terminators are doing or even that they exist. All the rulers did was set up the rules; the machines just enforce the rules.

The humans under attack by the Terminators may not realize they are being persecuted by billionaires, and the billionaires might not realize they are the persecutors, but that’s how the system works. (Please note how many Trump supporters are non-billionaires who are currently being victimized by the policies of their friend at the top, and how Trump swears that he is helping them.)

I ask you, what makes more sense: algorithms spontaneously deciding to exterminate all humans, or some humans using AI to buffer themselves from other humans who unfortunately get hurt in the process?

The second thing is happening now.

What does this have to do with testing?

AI is becoming a huge testing issue. Our oracles must include the idea of social responsibility. I hope that Facebook, and the people who want self-driving cars, and the people who create automated systems for recommending who gets loans and who gets long prison sentences, and Google, and all you who are building hackable conveniences, take a deep breath once in a while and consider what is right, not just what is cool.

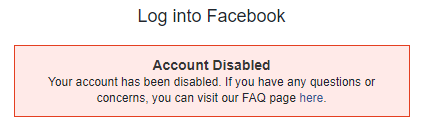

[UPDATE: Five days later, Facebook gave me access again without explanation. When I returned to my wall, I saw that I had misremembered the one thing I had put there. It was not “I hate Facebook” but rather “I don’t trust Facebook.” So it’s even weirder that they would take my account away.

Maybe they verified my identity? They could not have legally verified my identity, since when I appealed the abuse ban, they asked me to submit “ID’s”, but I submitted this PNG instead:

So maybe the algorithm simply detected that I uploaded SOMETHING and let me in?]

Perhaps “FEW HICCUPPS” should become “FEWR HICCUPPS” (fewer hiccups). R = responsibility.

[James’ Reply: I recently did add an “R” for “reasonableness,” but my colleagues have talked me into changing it to an “A” for “acceptability” (A FEW HICCUPPS). The idea, not covered in any other item on the list, is that something can be good, and not wrong, but still not good enough; there might be a better way for it to work that can reasonably be achieved.]

If you haven’t already, you might be interested in reading “Weapons of Math Destruction” by Cathy O’Neil. She is a mathematician who left academia to work in the financial industry. In her book, she describes the havoc that automated, “intelligent” systems wreak across a few different areas.

[James’ Reply: I have heard of this book. I might even own it. Haven’t read it yet, though.]

Great text. I’d like to translate it into Russian some day and republish it at liva.com.ua, a leftist site. May I?

[James’ Reply: Sure.]

This text reminded me of a piece by Andre Vltchek, a documentarian: https://www.counterpunch.org/2012/03/16/the-death-of-investigative-journalism/

He wrote about a similar phenomenon (IMO): big-media policies aimed at insulating most of us from the most horrible conflicts taking place not far from where we live. We should all be “friends,” like on Facebook, except for those not lucky enough to have a good life in a more or less decent country. They are not our concern.

[James’ Reply: Thanks for the link.]

Maybe FB has access to facial recognition verification somehow. Their facial recognition technology is scary, as evidenced by the automatically suggested tagging of faces in photos by name. Perhaps FB compares the photo you uploaded to photos of you elsewhere. Pure conjecture, of course, but thoughts that were considered paranoid in the past are now known facts. “They” really are tracking us, accumulating information on us…

This is frightening: https://gizmodo.com/how-facebook-figures-out-everyone-youve-ever-met-1819822691

[James’ Reply: I don’t know how they can legally know for sure what I look like just based on my email address. It’s not like people tag photos with my email address. Meanwhile, I’m not the only James Bach out there.]

Also, every time FB updates its privacy stuff the default setting for the new options is wide open.

They didn’t “legally” verify anything; you just gave them enough indirect information to trust you. It’s not that complicated. Your browser posts data to Facebook servers whenever you access a website tied to their APIs. Your browser-and-machine combination can be used to link you to Facebook identities, which in turn can be correlated, using machine learning or plain old linear regression, with people in your geographical proximity who do have a Facebook account. Furthermore, those people will tag you in pictures, which help Facebook’s face recognition algorithms reject proof of ID, for example. If you don’t trust me, just run Wireshark on your network interface when opening Google or YouTube. I bet there’s a lot more going on with ad servers, but I have no proof to back that up. Do you know what kind of information ads collect on you? Does anyone actually know where that data goes and who can legally access it?

The problem doesn’t seem to be limited to your personal account. When I try to view the Facebook page for Satisfice, I get “Sorry, this content isn’t available right now”.

[James’ Reply: For some reason the page was unpublished. I know it was published at some point, so I don’t know what happened to de-publish it. I think it’s up now.]

Why do you want a Satisfice, Inc. Facebook page if you hate and/or don’t trust Facebook?

[James’ Reply: Having a corporate Facebook page is not the same as being on Facebook as a person. My personal account, which I use to administer my corporate page, is completely locked down. I post no pictures and have no friends.]

I recently came across this interesting article on neural networks – my favourite line: “Roberto Novoa, a clinical dermatologist at Stanford University in the US, has described a time when he and his colleagues designed an algorithm to recognize skin cancer – only to discover that they’d accidentally designed a ruler detector instead, because the largest tumours had been photographed with rulers next to them for scale.” – https://physicsworld.com/a/neural-networks-explained/

Nice article, James. Same story in Biblical times.

Two observations about AI and social responsibility:

1. In the AI world, “testing” means something other than the “testing of software” that we are familiar with. Testing is something AI folks do after “training” their neural net. So if I ask one of our AI gurus whether the neural net is tested, they will say yes, but what they mean is that the neural net successfully recognizes and identifies something it never encountered during the learning phase 95% of the time. Then I say: not that testing; did you test the code that is the neural net? The answers are less crisp. What is really frightening to me is that a bug in the code that is the neural net can be overcome by learning, such that the AI gets the correct identification, but not for the correct reasons. AI can actually overcome bugs in its code, setting the stage for failures in the future. Fortunately, the amount of AI code itself on von Neumann architecture computers is relatively small and straightforward to write. However, this is changing as we write neural nets that learn faster and grow larger and more complex. The point is that we must test the AI code itself, using techniques that we have demonstrated work.

2. To be socially responsible, we should make greater use of simulation for things like autonomous vehicles. We have developed very sophisticated multi-physics simulation capability, and we should be driving our autonomous vehicles into pedestrians, walls, and other vehicles that are simulated in a computer, and correcting the software before venturing out on the streets. Fortunately, some automobile manufacturers are doing this, but you do not read about them because they are not in the news for harming people.

[James’ Reply: Greg Pope! Great to hear from you.]

Billionaires rule the world. Donald Trump is a billionaire. Therefore, Donald Trump rules the world.

Brilliant logic, but I have two questions for you:

1) Is Donald Trump really a billionaire?

2) Are there any other billionaires in the world?

[James’ Reply: Try rebooting your brain. Maybe that will clear up the problem you are having.]