Stockton Rush is dead: to begin with. There is no doubt whatever about that. He was killed in a spectacular implosion of the Titan submersible during a dive to the site of the wreck of the Titanic, on Father’s Day, 2023. This implosion is proof, beyond any reasonable rational doubt, that Stockton Rush’s engineering judgment was wrong.

[Note: I originally used the word “conceivable” instead of “reasonable,” but someone on LinkedIn made the point that sabotage is conceivable, and I agree that it is. The same commenter claims that the conceivability of sabotage means we must reserve judgment. Except by that logic we must also reserve judgment on the Hindenburg, the Vasa, or the Titanic itself. Maybe they all were caused by sabotage. We can’t be perfectly sure, right? In this case, I think judgment deferred is judgment evaded.]

This is relevant to us because if you are reading my blog you have an interest in the industry and culture of testing. We testers are often ridiculed or sneered at, patronized, or ignored, when we present evidence about risk. It has been reported that Rush fired a tester over a disagreement about risk and reasonable testing. There were lawsuits.

But he was wrong, and we know that not just because of the implosion, but also the circumstances surrounding it:

- The vessel was a one-of-a-kind prototype. (therefore: no comparable craft existed from which safety data could be obtained)

- The vessel had made no more than about 50 dives in its entire existence, and only five previous dives to the Titanic. (therefore: it was practically new, not old and worn out)

- The vessel was carrying civilian customers, including a 19-year-old man who was not an engineer or even an engineering student. (therefore: people were on board who could not have been equipped to evaluate the engineering risks even under the best case scenario)

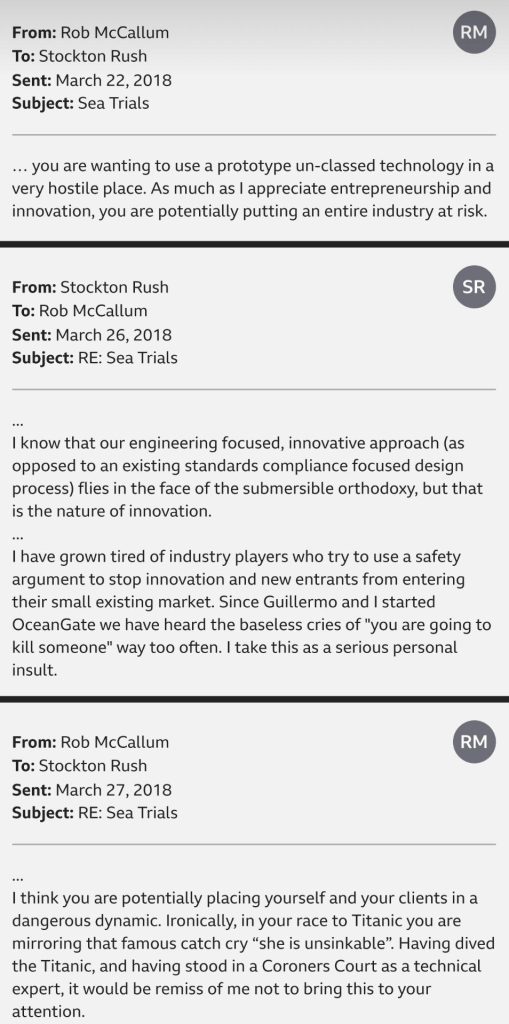

- Stockton Rush had been warned repeatedly by people in his own world. These warnings were rebuffed. (therefore: people who designed and used deep sea submersibles disagreed with him, not just random outsiders)

This vessel was not safe, and it was not even close to being safe. It was a bomb ready to detonate from its first dive. Although no one could have known for certain that it was as dangerous as it turned out to be, my point is that Rush’s claim that it was safe was at best an act of gross negligence, and at worst an act of fraud. Given that he perished in his own invention, I lean toward negligence caused by magical thinking.

Again, this is not just a feeling I have– we know that this is true. Titan is in pieces at the bottom of the ocean, because the ocean did crush it, because it was not strong enough to withstand the forces of the ocean and because its design did not permit any warning of failure. This was allowed to happen because its inventor falsely thought it was strong enough. He thought it was strong enough because he lacked evidence that it was weak. He lacked that evidence because he did not perform sufficient testing that might have given him that evidence. He didn’t perform that testing because it was expensive and time-consuming and because he falsely believed he had already mitigated the risks. He protected this false belief by systematically demeaning and dismissing qualified people who pointed out critical uncertainties.

He was wrong, now he’s gone. So what?

When knowledge is gained at the cost of people’s lives, it is moral and proper to share it more widely and to learn it all the better for the price paid. So, when I came across this post from Stockton Rush about the safety of Titan, I was moved to write a post of my own in reply. With the cold objectivity of recent events in mind, some of the rhetorical tricks that he used are easier to answer.

The post addresses the question of why the sub was not “classed,” which is to say certified.

Classing may be effective at filtering out unsatisfactory designers and builders, but the established standards do little to weed out subpar vessel operators – because classing agencies only focus on validating the physical vessel.

This is a non sequitur; a straw man fallacy. Yes, testing and certifying a physical vessel is not a guarantee against all forms of safety problems. But no one says that it is. What classing does is two-fold: it is a testing process meant to uncover safety problems of certain important kinds; and it is a form of social insurance that protects a company against accusations of gross negligence or reckless disregard for human life.

They do not ensure that operators adhere to proper operating procedures and decision-making processes – two areas that are much more important for mitigating risks at sea.

Perhaps that is true. But there is a very specific and important failure mode that has almost nothing to do with procedures and decision-making processes: the catastrophic and instantaneous implosion of a pressure hull at extreme depths. It is just that failure mode which is addressed by certifying the hull of a sub for the kinds of depths you want to explore.

The vast majority of marine (and aviation) accidents are a result of operator error, not mechanical failure. As a result, simply focusing on classing the vessel does not address the operational risks. Maintaining high-level operational safety requires constant, committed effort and a focused corporate culture – two things that OceanGate takes very seriously and that are not assessed during classification.

This is the fallacy of false choice. It’s not a matter of choosing whether to have a constant committed effort to safety OR to get your unique hull properly tested. Do both!

When OceanGate was founded the goal was to pursue the highest reasonable level of innovation in the design and operation of manned submersibles. By definition, innovation is outside of an already accepted system. However, this does not mean that OceanGate does meet standards where they apply, but it does mean that innovation often falls outside of the existing industry paradigm.

Stockton is engaging in the fallacy of equivocation, here. That’s when you change the meaning of key terms in the middle of your argument. First, he uses the term innovation and claims that it means going outside an already accepted system. This is easy to agree with. To innovate is to do something new, and if it is new, then it can’t have been accepted yet, right? Yes, technically, that’s true. But then he applies that to safety standards, and implies that if we like innovation, we must therefore suspend safety standards because they don’t mean anything (i.e. “outside of the…paradigm”).

If we agree that innovation means doing something new, does that require us to agree that established safety standards and practices don’t apply? No! It means that people who innovate in the area of life-critical systems have an extra high burden of testing and analysis if they want their innovation to be accepted by society. If the existing standards don’t apply, then you must invest in new and better standards. You can’t just flout the whole process! Well, of course you can flout it, but you can’t call that reasonable engineering.

While classing agencies are willing to pursue the certification of new and innovative designs and ideas, they often have a multi-year approval cycle due to a lack of pre-existing standards, especially, for example, in the case of many of OceanGate’s innovations, such as carbon fiber pressure vessels and a real-time (RTM) hull health monitoring system. Bringing an outside entity up to speed on every innovation before it is put into real-world testing is anathema to rapid innovation.

This is all true. What it means is that rapid innovation in life critical systems might be possible in creating a prototype. But when it comes to bringing a safe product to market, you may have to go slow. That’s because there may be no comparable engineering data or relevant engineering experience that could make rapid certification possible.

One way around this is to dramatically over-engineer your product. The first iron bridges used a lot more iron than later ones (e.g. the Britannia Tubular Bridge). Stockton could have designed a sub to withstand 24,000 foot depths (roughly twice as deep as Titanic lies), but that would have been incredibly bulky and expensive. The whole point of innovating was to create a large deep diving submersible for a price he could afford.

For example, Space X, Blue Origin and Virgin Galactic all rely on experienced inside experts to oversee the daily operations, testing, and validation versus bringing in outsiders who need to first be educated before being qualified to ‘validate’ any innovations.

Inside knowledge is important. But it comes along with a loss of critical distance. Critical distance is the difference between two ways of thinking. Insiders tend to think alike. They share the same incentives. They bond against the pressures of the outside world. Extended close contact in a team is toxic to critical distance. And that means people who are supposed to be thinking about safety have a hard time making that case– their hearts aren’t in it, and their minds too readily accept the prevailing opinion.

If you want good testing, you must cultivate critical distance. You need outsiders for that. You need testers who work under a different incentive structure than the builders do. You need testers who are rewarded when they find trouble.

As an interim step in the path to classification, we are working with a premier classing agency to validate Titan’s dive test plan. A licensed marine surveyor will witness a successful dive to 4000 meters, inspect the vessel before and after the dive, and provide a Statement of Fact attesting to the completion of the dive test plan.

That’s it? That’s not enough testing! We know this for four reasons at least. One is that industry experts strongly objected to this, and urged the company to obtain proper certification, regardless of any other reason I may suggest. A second reason is that you must test beyond the depths at which you intend to routinely operate. Engineering requires an added safety factor, especially when there is so much we don’t know about the behavior of the unique carbon-fiber hull. A third reason is that they should really test to the point of destruction if they want a hope of understanding the full dynamics of the hull. Finally, there is this reason: we know the implosion occurred despite the sub not even being near the depth for which it was built. It had already been deeper, some number of times (although not more than 50 times total, in its entire existence). Yet it imploded. This proves that the hull either always had a substantial probability of failing, even at shallower depths, or that it had been degraded by previous dives in ways that turned out to have been invisible.

In addition to designing and building an innovative carbon fiber hull, our team has also developed and incorporated many other elements and procedures into our operations to mitigate risks.

OceanGate’s submersibles are the only known vessels to use real-time (RTM) hull health monitoring. With this RTM system, we can determine if the hull is compromised well before situations become life-threatening, and safely return to the surface. This innovative safety system is not currently covered by any classing agency.

No other submersible currently utilizes real-time monitoring to monitor hull health during a dive. We want to know why. Classed subs are only required to undergo depth validation every three years, whereas our RTM system validates the integrity of the hull on each and every dive.

The efficacy of the RTM system had also not been tested! To fully test such a system, you have to bring the hull to a failure point and verify the monitoring system performs its job. They never did that, because we already know they never tested the hull to failure.

And the other thing we know is that the RTM system either failed to detect the weakening of the hull, or else the operators of the vessel didn’t pay attention to it.

Our risk assessment team looks at the entire expedition and completes a detailed, quantified risk assessment for each dive. The risk assessment takes into account 25 specific factors that can influence a dive outcome. Using that information, a dive plan is written to mitigate against these known risks. These risk factors include things like weather forecast, sea state, sub maintenance, crew fatigue, predicted currents, dive site experience, recent dive history, schedule expectations, crew experience, and more. In this assessment, the actual operational risks are almost always concentrated on the surface operations not the subsea performance of the submersible.

None of that mitigates the risk of implosion of an untested hull. This is like touting the safety of the deck chairs on the Titanic.

Meanwhile, the hull did implode.

One Good Way to Wake People Up To the Importance of Testing…

…is to die. Factory safety, fire codes, railway safety systems, aircraft safety, have all famously and grimly improved directly as a result of mass death.

We testers must collect stories of disasters, big and small, so we can wake our clients up to risks around us. We must tell these stories. The best ones for you will happen within your own company. Record them. Safety culture is one part folklore.

It wasn’t wrong to innovate. It was wrong to bring paying customers into an under-tested vessel without disclosing the full history of the safety debate, at the very least. It was wrong to throw up a smokescreen about innovation and total risk management as if that has anything to do with the unaddressed risk of sudden implosion.

There are always risks in life. That’s not the issue. The issue is willful ignorance and irresponsible behavior.

Excellent post, James. I can imagine that people will cry, “too soon!” – but these lessons about hubris are essential and now is a good time.

Harsh but meaningful.

One factual error… you state testing at 4000 M is not at the depths the craft would operate. That is false. The Titanic is at 12,500 ft (3,800 meters).

At 3,800 meters, in salt water exterior pressure would be 5,500 PSI, 286,000 mmHg, 376 atmospheres.

During the 4,000 meter test in salt water the craft would have experienced 5,800 PSI, 301,000 mmHg, 396 atmospheres.

This is still not a 2x safety factor and does nothing to determine the effects of multiple dives on carbon fiber construction.
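The figures in this comment can be roughly reproduced from the hydrostatic relation P = ρgh. The sketch below is only an illustration, not anything taken from OceanGate or from the commenter: it assumes a constant seawater density of 1025 kg/m³ and standard gravity, it reports gauge pressure (it leaves out the one atmosphere already present at the surface), and the function names are invented for the example. Real seawater compresses slightly with depth, so true values would be a little higher.

```python
# Rough hydrostatic (gauge) pressure at depth in seawater.
# ASSUMPTIONS (not from the post): uniform density, standard gravity.
RHO_SEAWATER = 1025.0   # kg/m^3, assumed typical seawater density
G = 9.80665             # m/s^2, standard gravity
PA_PER_PSI = 6894.757   # pascals per pound-force per square inch
PA_PER_ATM = 101325.0   # pascals per standard atmosphere
MMHG_PER_ATM = 760.0    # millimetres of mercury per standard atmosphere


def gauge_pressure_pa(depth_m: float) -> float:
    """Pressure added by the water column alone, in pascals."""
    return RHO_SEAWATER * G * depth_m


def describe(depth_m: float) -> dict:
    """Return the gauge pressure at depth_m in psi, atm and mmHg."""
    pa = gauge_pressure_pa(depth_m)
    atm = pa / PA_PER_ATM
    return {"psi": pa / PA_PER_PSI, "atm": atm, "mmHg": atm * MMHG_PER_ATM}


if __name__ == "__main__":
    for depth in (3800, 4000):
        p = describe(depth)
        print(f"{depth} m: {p['psi']:.0f} psi, "
              f"{p['atm']:.0f} atm, {p['mmHg']:.0f} mmHg")
```

Under these assumptions the results land within about one percent of the numbers quoted above, which is as much agreement as a constant-density estimate can claim.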

[James’ Reply: I stated that testing at 4000m is not testing beyond the depth of the Titanic. This is because 4000 is roughly at that depth of the Titanic. It is technically beyond, but not by enough. A typical engineering practice is to engineer for 50% beyond required strength. But in this case let’s say 20%. That would require dives to 4560 meters.]
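The margin arithmetic in this reply is easy to check. The sketch below assumes, as a simplification, that the load on the hull scales linearly with depth, so a margin on strength translates directly into a margin on test depth. The 3800 m figure comes from the comment thread; the function names are hypothetical and exist only for this example.

```python
# Test depth needed for a given margin over the operating depth.
# ASSUMPTION: hull load is proportional to depth (constant water density).
TITANIC_DEPTH_M = 3800.0  # operating depth quoted in the comments


def required_test_depth(operating_depth_m: float, margin: float) -> float:
    """Depth that exceeds the operating depth by `margin` (0.2 means 20%)."""
    return operating_depth_m * (1.0 + margin)


def achieved_margin(operating_depth_m: float, test_depth_m: float) -> float:
    """Fractional margin that a given test depth provides."""
    return test_depth_m / operating_depth_m - 1.0


if __name__ == "__main__":
    for margin in (0.2, 0.5, 1.0):
        depth = required_test_depth(TITANIC_DEPTH_M, margin)
        print(f"{margin:.0%} margin -> test to about {depth:.0f} m")
    m = achieved_margin(TITANIC_DEPTH_M, 4000.0)
    print(f"A 4000 m dive gives a margin of about {m:.1%}")
```

By this measure, the 4000 m witnessed dive exceeds the 3800 m operating depth by only about 5%, well short of even the 20% figure used in the reply.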

Metal fatigue is different from carbon fiber fatigue. Your overall prescription for destructive testing is wise. At minimum I would have liked to see at what depth the craft ruptured in the Marianas Trench, with full recovery of the wreckage, then repeat with a craft that had ten 3 hour trips below 4,000 meters before the destructive test, then repeat with a craft that had fifty 3 hour trips below 4,000 before the destructive test.

The cost would be high, but the test could show progressive impact of repeated strain at a depth beyond planned commercial operations. The cost would certainly be less than loss of life and corporate reputation.

[James’ Reply: Yes. Also, this is the first ever implosion of a deep sea submersible operating within its intended depth envelope. Therefore, the whole industry is now tainted.]

Even basic (low-risk) engineering requires repetitive testing. For example, a company in my area manufactures taps (faucets, for those in the US). They have a test lab where the product is cycled to replicate repeated usage (on, off, running full blast, running at a drip, etc.) for far more repetitions than normal use would reach in many years, and they also test to destruction to identify expected lifespan. Only when they’re satisfied does the design go to market. That’s a domestic item, low risk, easily replaceable. No one’s going to die if a £50 tap fails after a year’s usage, but still they test to destruction. Software testers also test well beyond normal parameters to identify the breaking point.

It seems that the testing on this project was “can I make that dive” rather than “if I make that dive 5 times is there damage incurred, 10 times, 50 times, if I make the dive and the hull shows signs of stress at what point do the warning bells sound, is that soon enough for a safe retreat, what point is there no return from imminent failure… etc”.

Innovation is essential, but if you’re using a product commercially you simply have to thoroughly test.

The best time to start investigating the cause of a failure is as soon as possible after that failure occurs. Investigation requires asking questions as well as looking at data and we all know our interpretation of something is different on day 1 to when asked a few weeks or months later.

Excellent post, really hope lessons are learnt from this incident.

Another factor when operating at the so-called ‘leading edge’ of technology is that of tool-lag – it takes a while for the tools we use to test something to become available in any marketplace (the tool vendors need to be assured that the technology is viable before investing in the development of their product).

We have long understood the perils of metal fatigue and now have a whole raft of tools and techniques to detect the smallest problem in metal-based products (from x-rays to ultrasound). But carbon fibre? To my knowledge we don’t have the tools developed yet to detect developing issues – this surely was another big risk factor. The RTM was one angle but we may never know if the hull was being built with defective materials in the first place.

[James’ Reply: Excellent point.

Also, my understanding is that the RTM system operated based on microphones. This seems strange because in a documentary of one of the few other dives Titan did on the Titanic, he briefs the passengers by telling them that it’s normal for the submersible to make noises during a dive. This sounds to me like the normalization of risk. But it also seems like the RTM system might have been giving results that were dismissed.]

A few years ago I was working on a project where we were developing an emergency communications system based on a new digital standard.

The certification bodies were well behind where they needed to be, and the test procedures they were specifying were inadequate – any existing digital radio could have passed the tests.

We ended up having to take a risk. We invested heavily in building the tools we needed to properly test the new radios. In parallel we partnered with the certifying bodies, and with our competitors, to make sure that the certification standards we were being asked to comply with were meaningful and relevant.

This involved some commercial risk. The test tools we built were expensive, and we had to supply information to competitors about what we were doing and why. Some of those competitors were much better resourced than we were. But this was the responsible thing to do. Lives actually depended on our equipment being able to work with our competitors’ equipment and vice versa.

Sometimes red tape is there for a reason, but an even better protection is a deep and respectful engineering culture – which seems to have been sorely lacking at OceanGate.

Might be all true.

But…. if I say:

The vessel was hit by a beam of an alien UFO..

Can you prove me wrong then?

[James’ Reply: Well, yes, I can. Here’s my proof.

1. We can reject the truth of a proposition if any of the following are true:

a. Self-contradiction: The statement contradicts itself.

b. Incoherence: The statement contradicts other propositions we accept as fact.

c. Obscurity: We cannot know what the proposition means.

d. Ambiguity: The proposition has many potential meanings and at least one of them is definitely false.

2. Your scenario seems obscure to me. What do you mean by “alien?” What do you mean by “beam?”

3. If you were to define your terms more precisely, you would run into the coherence problem: it has not been established that there are such things as “beams” or “aliens.” And those ideas seem to violate our established and tested ideas about physics.

4. Therefore, we can say your proposition is wrong.

But let’s say we accept that you can amend the proposition to evade breaking any laws of thought or physics. Fine. Then my response is that I am not talking about “proof” in this blog post. I’m talking about “reasonable rational doubt,” not certainty.]

Plus – That which is asserted without evidence, can be dismissed, without evidence.

It’s a useful heuristic.

Okay, the laser beam from a UFO was a bit of a joke or exaggeration. But it seems it was actually hit by lightning once….

(sorry, the link is in Dutch)

https://www.telegraaf.nl/nieuws/1440561451/nieuwe-theorie-over-catastrofe-met-titan-duikboot

Another excellent and enlightening post. I have been following your post for almost 12 years now.

As far as this incident is concerned, it’s extremely unfortunate, because Stockton totally believed that his product was good and fail-proof, or else he would not have tried it himself.

The immediate solution I can think of is that we need regulations and standards setting a minimum over-engineering percentage for life-threatening products, say 40% or 50%. These kinds of products should be over-engineered by at least 50% to ensure we are always in the safe zone and loss of life is prevented.

[James’ Reply: There already are standards and laws about this. Stockton avoided them by not operating in the United States, and not categorizing his passengers as passengers.]